Publications

A list of selected papers in which research team members participated.

For a full list see below or go to Google Scholar (Jisun An and Haewoon Kwak).

AI/ML/NLP

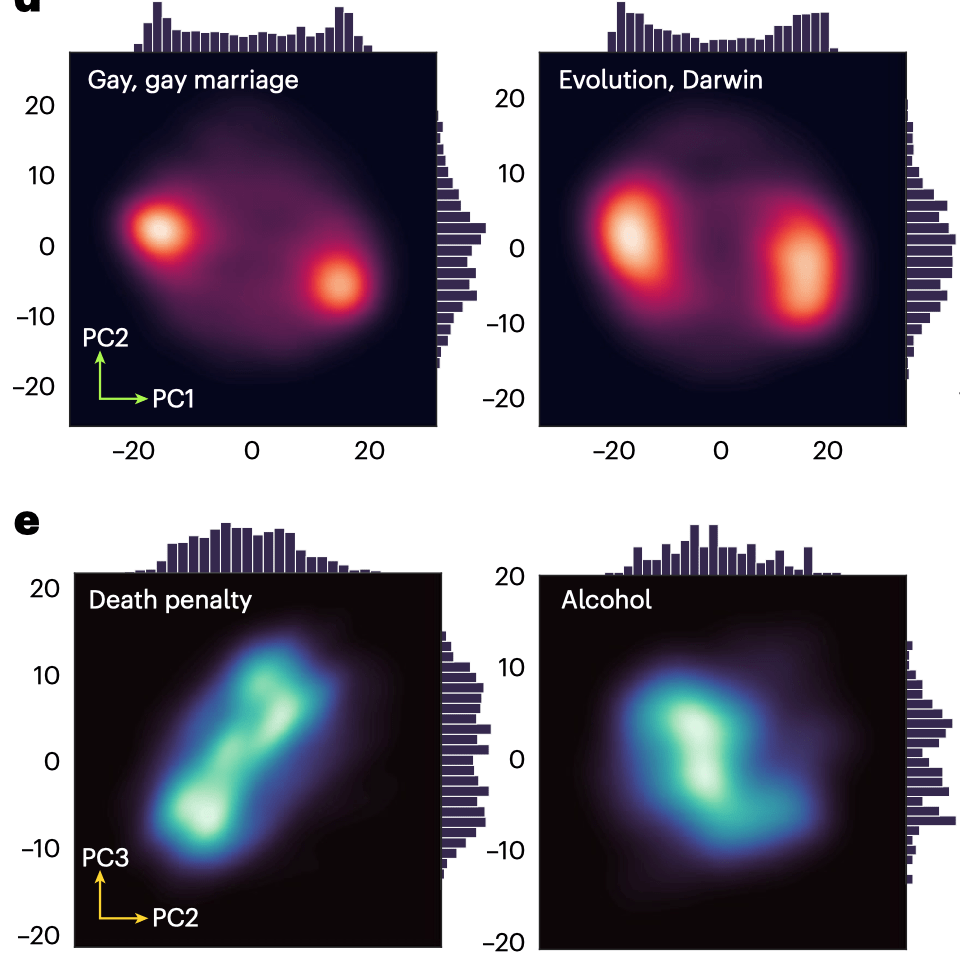

How are our beliefs formed and how do they influence each other? By combining large language models (LLMs) with collective online records of human belief, we created a “map” showing how thousands of beliefs relate to one another. This model allows us to predict a person’s next belief, measure potential cognitive dissonance, and ultimately understand the core principles governing human cognition.

Byunghwee Lee, Rachith Aiyappa, Yong-Yeol Ahn, Haewoon Kwak, Jisun An

Press coverage - Phys.org Featured Article

computational journalism AI/ML/NLP online harm bias/fairness

How can we spot fake news? We go beyond single articles to evaluate the credibility of entire news outlets. Discover the present and future of new technologies that can rapidly identify sources of disinformation by jointly analyzing their factuality and political bias.

Preslav Nakov, Jisun An, Haewoon Kwak, Muhammad Arslan Manzoor, Zain Muhammad Mujahid, Husrev Taha Sencar

AI/ML/NLP

How can we quickly and accurately compare the knowledge graphs that structure a sentence’s meaning? Existing metrics have limitations, often failing to capture true semantic similarity while being computationally expensive. We developed REMATCH, a new and efficient metric that addresses this by extracting core semantic elements called ‘motifs’ and comparing their collective sets. REMATCH measures semantic similarity 1–5 percentage points more accurately than previous state-of-the-art metrics, and it is five times faster. This model can play a key role in building more sophisticated natural language understanding systems and directly benefits downstream applications that rely on analyzing semantic relationships between sentences.

Zoher Kachwala, Jisun An, Haewoon Kwak, Filippo Menczer
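As a rough illustration of the set-comparison idea in the REMATCH summary above, the sketch below scores two sentences by the overlap of their motif sets. It is not the published REMATCH implementation. The motif tuples are hypothetical, motif extraction from the knowledge graphs is omitted, and Jaccard overlap is assumed here as the set-similarity measure.

```python
def jaccard(a, b):
    """Jaccard overlap of two collections, treated as sets."""
    a, b = set(a), set(b)
    if not a and not b:
        return 1.0  # two empty sets are treated as identical
    return len(a & b) / len(a | b)


# Hypothetical motifs for two sentences, written as (head, relation, tail).
motifs_1 = {
    ("want-01", "ARG0", "boy"),
    ("want-01", "ARG1", "go-02"),
    ("go-02", "ARG0", "boy"),
}
motifs_2 = {
    ("want-01", "ARG0", "girl"),
    ("want-01", "ARG1", "go-02"),
    ("go-02", "ARG0", "girl"),
}

# One shared motif out of five distinct motifs overall.
similarity = jaccard(motifs_1, motifs_2)
```

Comparing whole sets avoids searching for a node-by-node alignment between the two graphs, which is one plausible reason a set-based metric can be fast.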

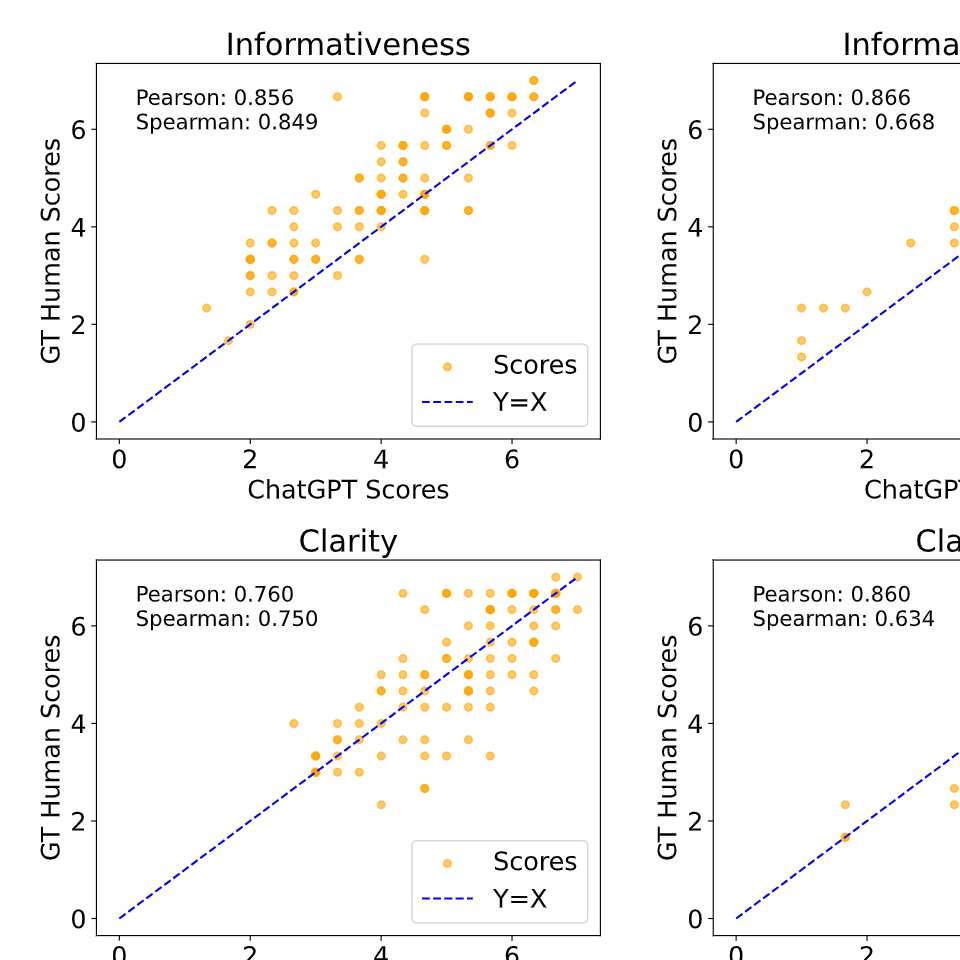

AI/ML/NLP HCI

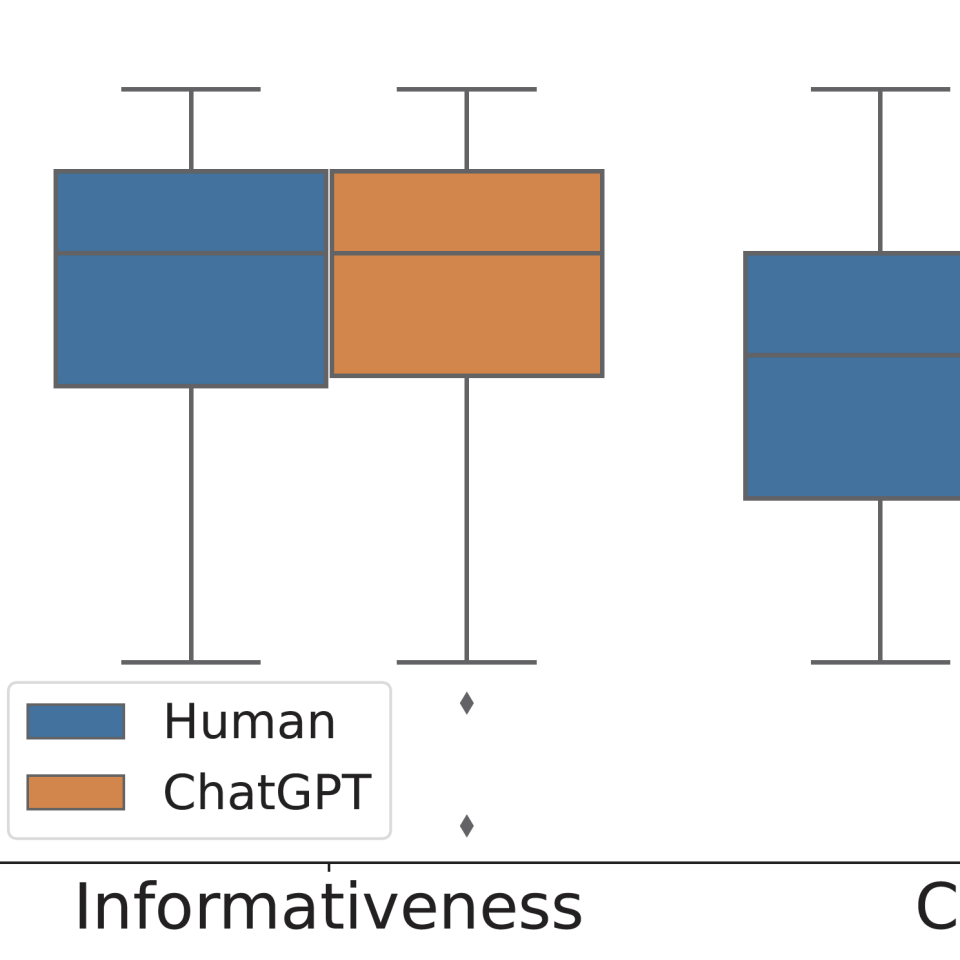

How can we evaluate the quality of natural language explanations for decisions made by AI? Direct human evaluation is accurate, but it is difficult, time-consuming, and expensive. We tested whether ChatGPT can evaluate AI explanations for ‘informativeness’ and ‘clarity’ as expert annotators do, and how well its judgments align with human judgments across various scales. The results show that ChatGPT performs very similarly to humans when sorting explanations into broad categories like “good/bad,” but struggles to assign fine-grained scores from 1 to 7. Notably, in ‘pairwise comparison’ tasks (judging which of two explanations is better), it demonstrated high accuracy comparable to that of human experts. This research shows that large language models can serve as reliable and efficient tools to supplement human evaluators under specific conditions, which can accelerate the development of transparent and responsible AI systems.

Fan Huang, Haewoon Kwak, Kunwoo Park, Jisun An

AI/ML/NLP

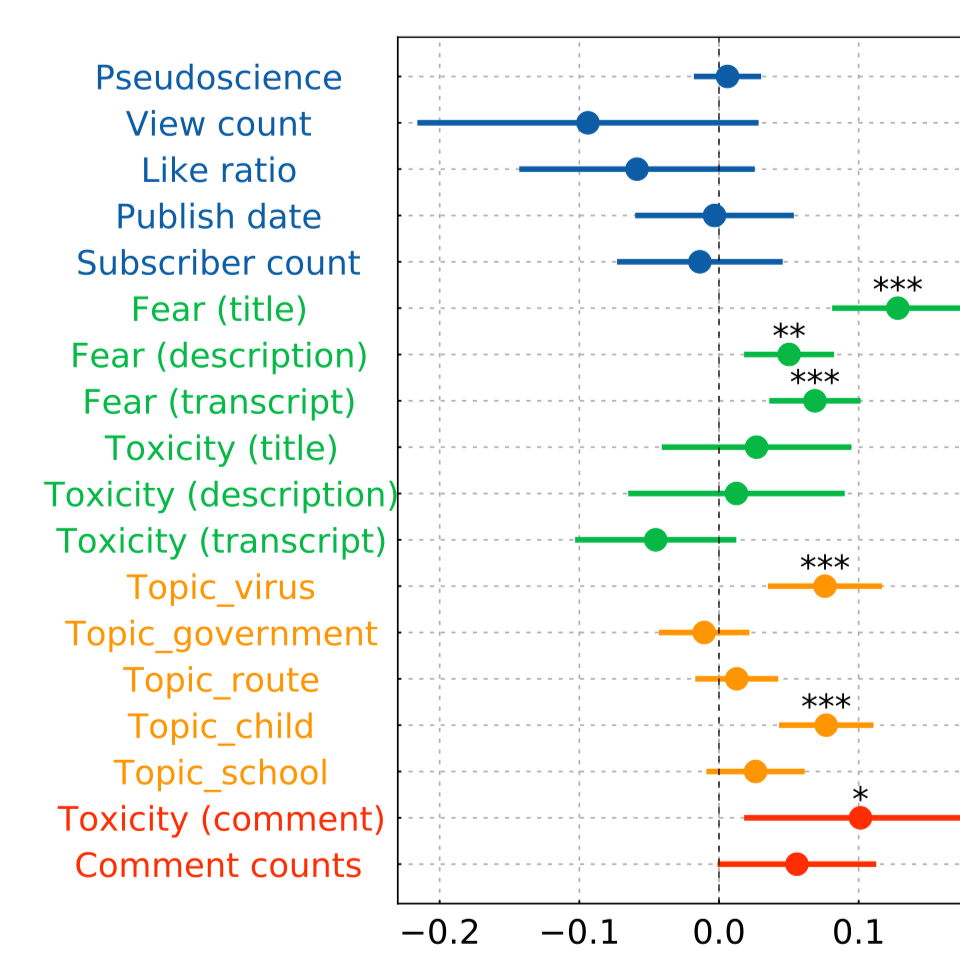

What is the true impact of toxic trolling in the comment sections of anti-vaccine YouTube videos? While it’s widely believed that such comments spread fear and fuel vaccine hesitancy, there has been little empirical evidence to measure this effect. Our latest study tackles this question by analyzing the complex interplay between toxicity and fear across 484 anti-vaccine videos and more than 414,000 of their comments. Using machine learning to score each comment, we found a significant link between the overall toxicity of a video’s comment section and the level of fear expressed within it. More importantly, we discovered a powerful contagion effect; the toxicity of highly liked early comments was significantly associated with a rise in fear in subsequent comments. This influence was also found to be bidirectional, as highly liked fearful comments were linked to an increase in later toxicity.

Kunihiro Miyazaki, Takayuki Uchiba, Haewoon Kwak, Jisun An, Kazutoshi Sasahara

AI/ML/NLP

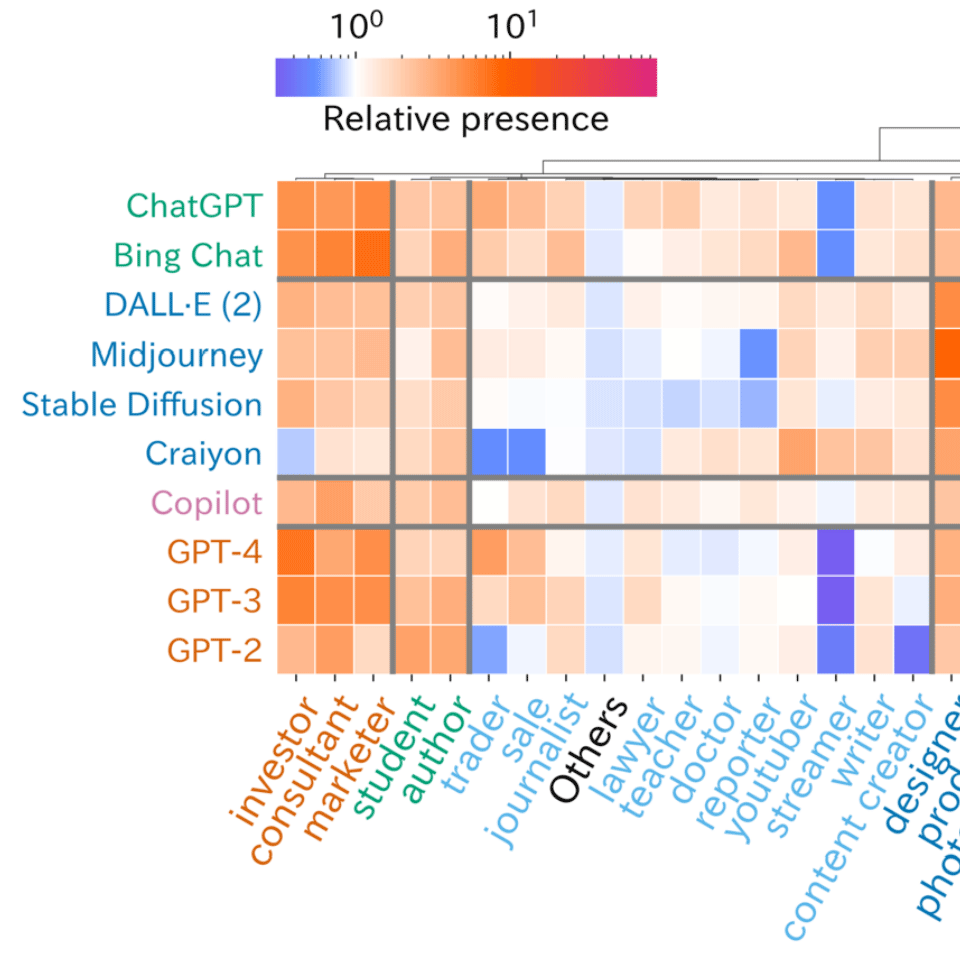

The emergence of generative AI has sparked substantial discussions, with the potential to have profound impacts on society in all aspects. As emerging technologies continue to advance, it is imperative to facilitate their proper integration into society, managing expectations and fear. This paper investigates users’ perceptions of generative AI using 3M posts on Twitter from January 2019 to March 2023, especially focusing on their occupation and usage. We find that people across various occupations, not just IT-related ones, show a strong interest in generative AI. The sentiment toward generative AI is generally positive, …

Kunihiro Miyazaki, Taichi Murayama, Takayuki Uchiba, Jisun An, Haewoon Kwak

Press coverage - Blockchain News

AI/ML/NLP

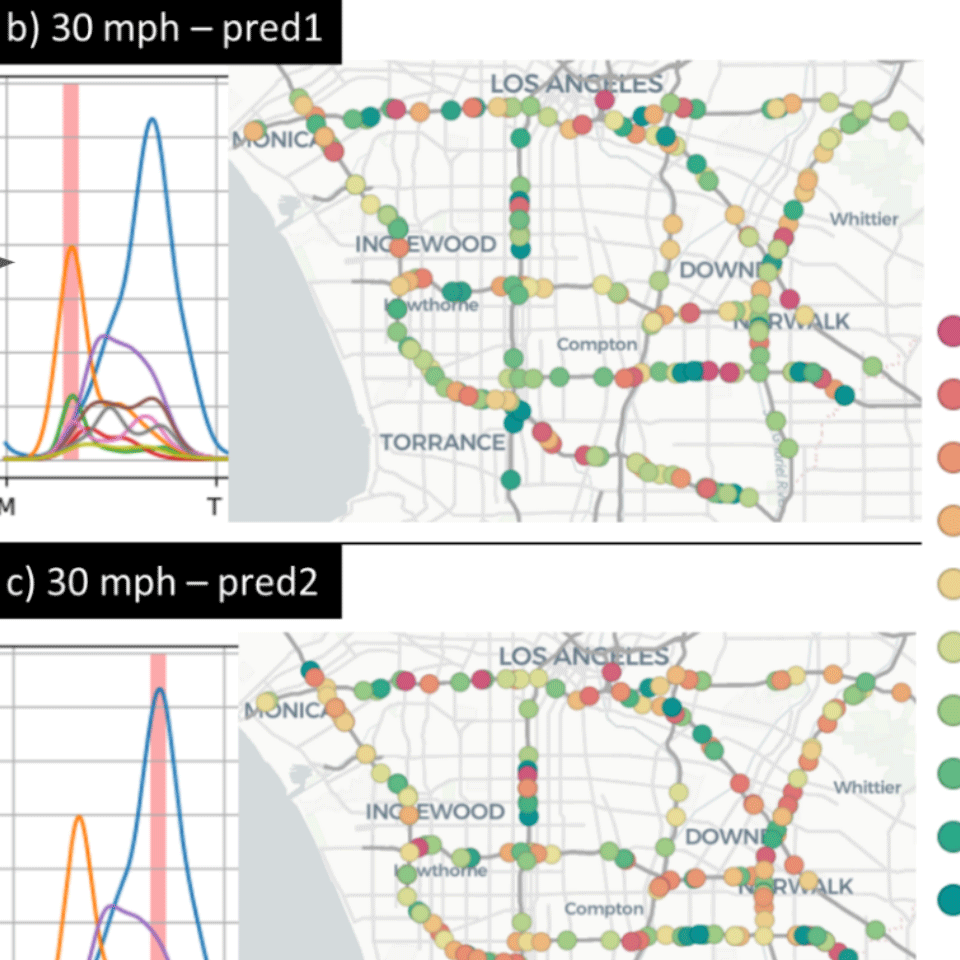

Traffic prediction is one of the key elements to ensure the safety and convenience of citizens. Existing traffic prediction models primarily focus on deep learning architectures to capture spatial and temporal correlation. They often overlook the underlying nature of traffic. Specifically, the sensor networks in most traffic datasets do not accurately represent the actual road network exploited by vehicles, failing to provide insights into the traffic patterns in urban activities. To overcome these limitations, we propose an improved traffic prediction method based on graph convolution deep learning algorithms. …

Sumin Han, Youngjun Park, Minji Lee, Jisun An, Dongman Lee

AI/ML/NLP

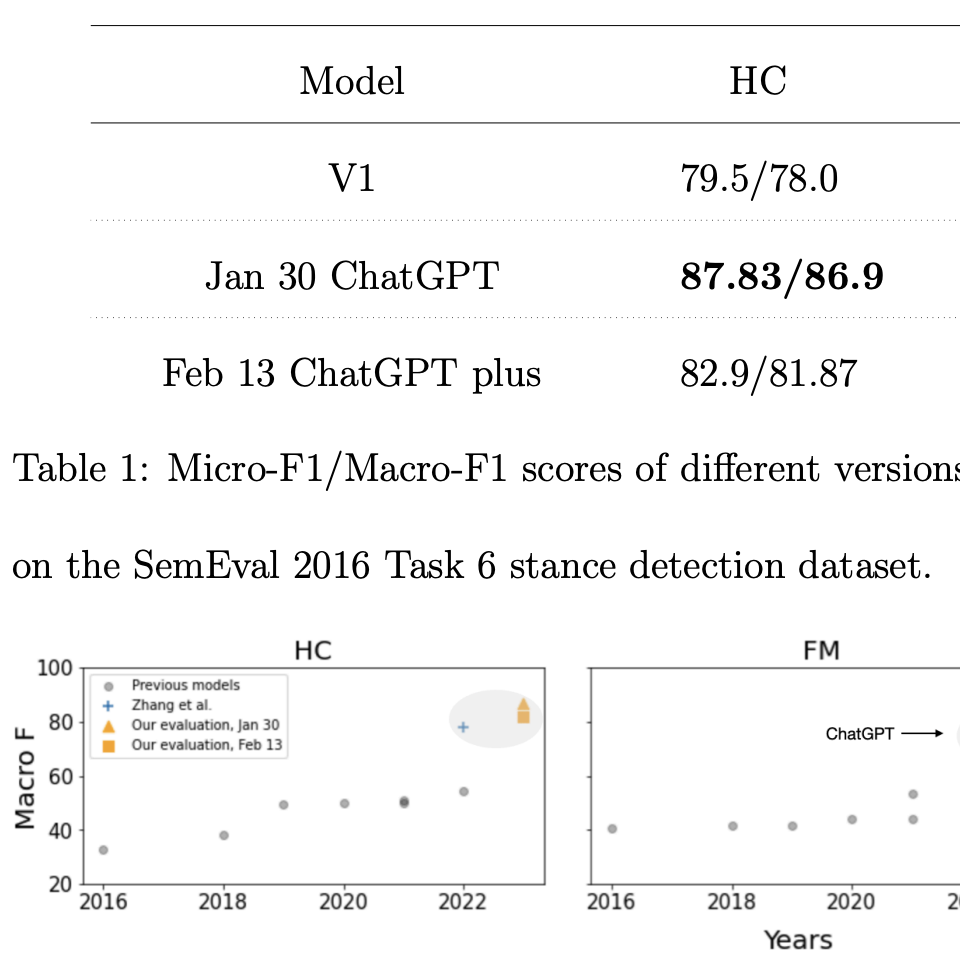

ChatGPT, the first large language model (LLM) with mass adoption, has demonstrated remarkable performance in numerous natural language tasks. Despite its evident usefulness, evaluating ChatGPT’s performance in diverse problem domains remains challenging due to the closed nature of the model and its continuous updates via Reinforcement Learning from Human Feedback (RLHF). We highlight the issue of data contamination in ChatGPT evaluations, with a case study of the task of stance detection. We discuss the challenge of preventing data contamination and ensuring fair model evaluation in the age of closed and continuously trained models.

Rachith Aiyappa, Jisun An, Haewoon Kwak, Yong-Yeol Ahn

TrustNLP (Collocated with ACL), 2023

AI/ML/NLP

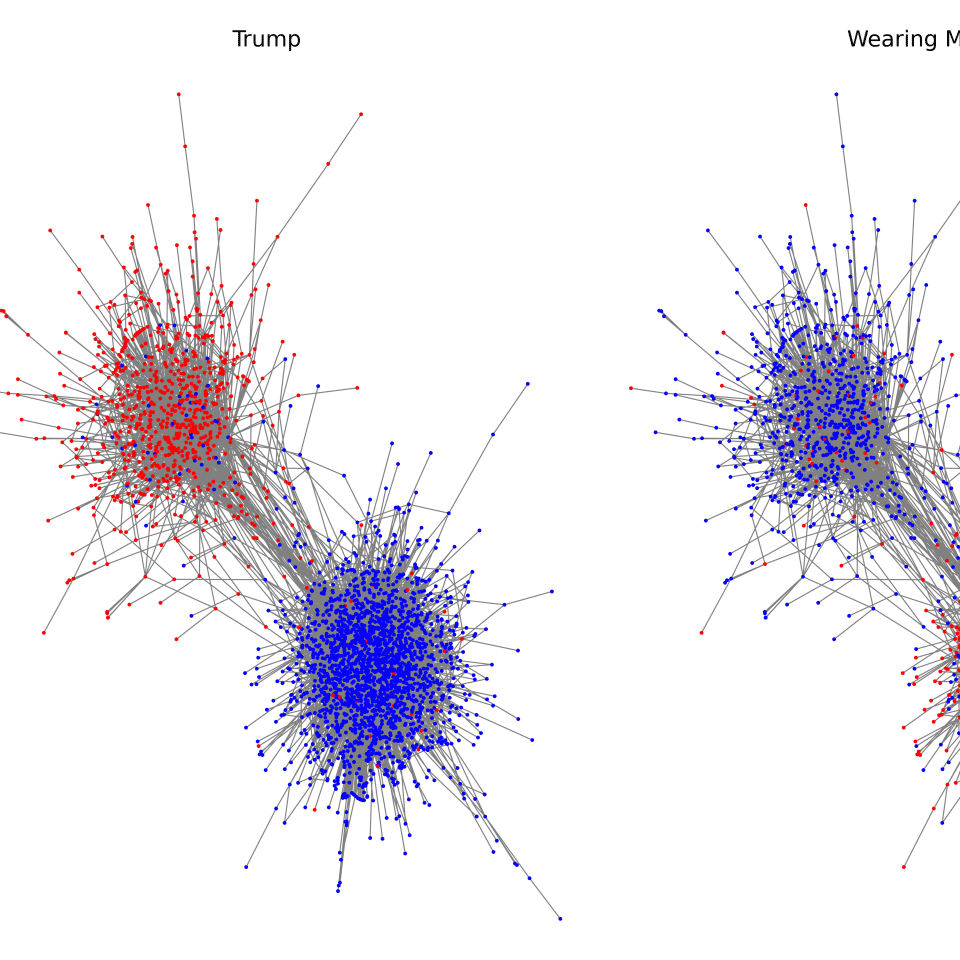

People who share similar opinions towards controversial topics could form an echo chamber and may share similar political views toward other topics as well. The existence of such connections, which we call connected behavior, gives researchers a unique opportunity to predict how one would behave for a future event given their past behaviors. In this work, we propose a framework to conduct connected behavior analysis. Neural stance detection models are trained on Twitter data collected on three seemingly independent topics, i.e., wearing a mask, racial equality, and Trump, to detect people’s stance, …

Hong Zhang, Haewoon Kwak, Wei Gao, Jisun An

AI/ML/NLP online harm

Recent studies have warned that much online hate speech is implicit. Because of its subtle nature, explaining the detection of such hateful speech has been a challenging problem. In this work, we examine whether ChatGPT can be used to provide natural language explanations (NLEs) for implicit hateful speech detection. We design our prompt to elicit concise ChatGPT-generated NLEs and conduct user studies to evaluate their quality by comparing them with human-generated NLEs. We discuss the potential and limitations of ChatGPT in the context of implicit hateful speech research.

Fan Huang, Haewoon Kwak, Jisun An

AI/ML/NLP online harm

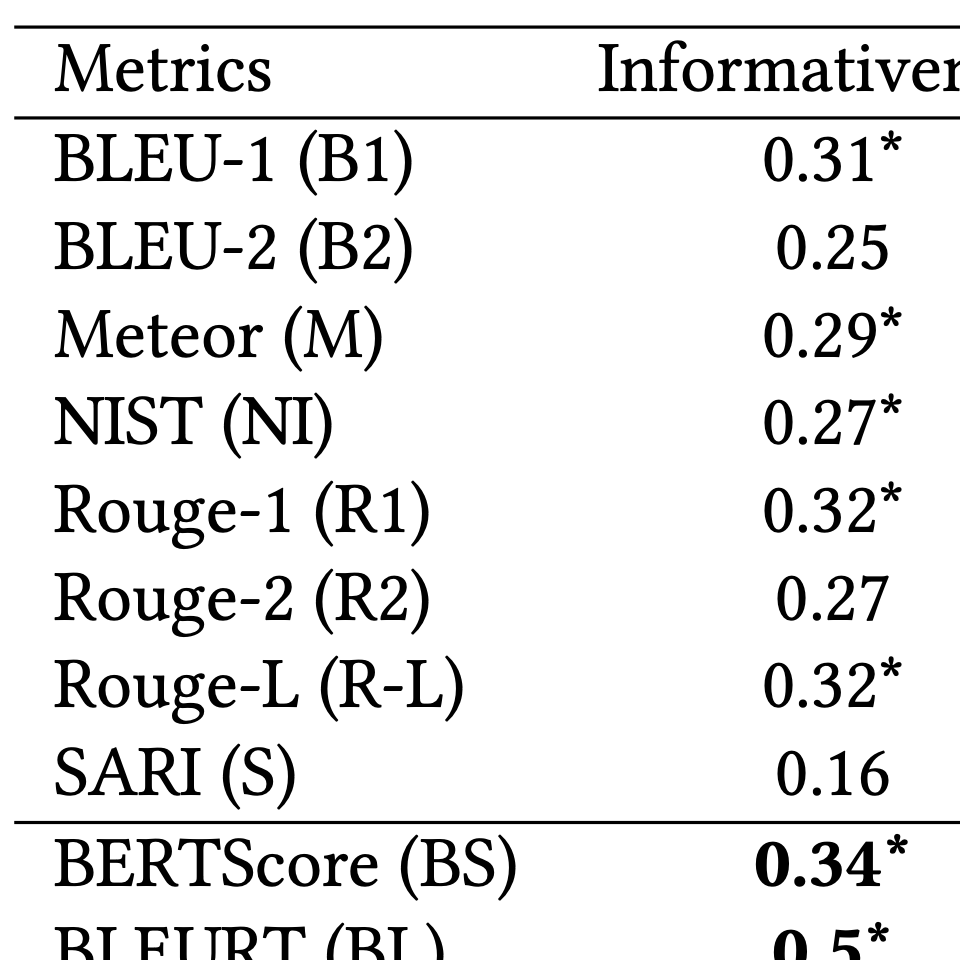

Recent studies have exploited advanced generative language models to generate Natural Language Explanations (NLE) for why a certain text could be hateful. We propose the Chain of Explanation (CoE) Prompting method, using the heuristic words and target group, to generate high-quality NLE for implicit hate speech. We improved the BLEU score from 44.0 to 62.3 for NLE generation by providing accurate target information. We then evaluate the quality of generated NLE using various automatic metrics and human annotations of informativeness and clarity scores.

Fan Huang, Haewoon Kwak, Jisun An

AI/ML/NLP political science online harm

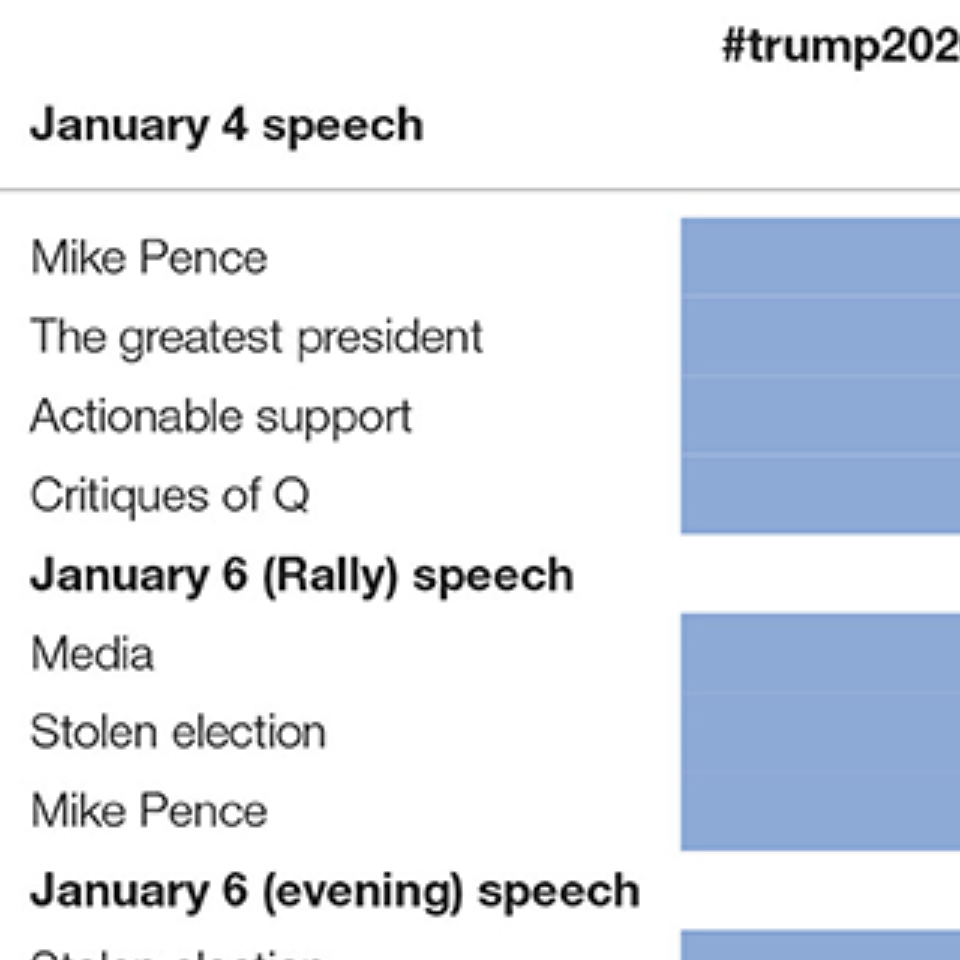

The transfer of power stemming from the 2020 presidential election occurred during an unprecedented period in United States history. Uncertainty from the COVID-19 pandemic, ongoing societal tensions, and a fragile economy increased societal polarization, exacerbated by the outgoing president’s offline rhetoric. As a result, online groups such as QAnon engaged in extra political participation beyond the traditional platforms. This research explores the link between offline political speech and online extra-representational participation by examining Twitter within the context of the January 6 insurrection. Using a mixed-methods approach of quantitative and qualitative thematic analyses, the study combines offline speech information with Twitter data during key speech addresses leading up to the date of the insurrection, exploring the link between Trump’s offline speeches and QAnon’s hashtags across a 3-day timeframe. We find that links between online extra-representational participation and offline political speech exist. This research illuminates this phenomenon and offers policy implications for the role of online messaging as a tool of political mobilization.

Claire Seungeun Lee, Juan Merizalde, John D. Colautti, Jisun An and Haewoon Kwak

Press coverage - PsyPost

AI/ML/NLP online harm

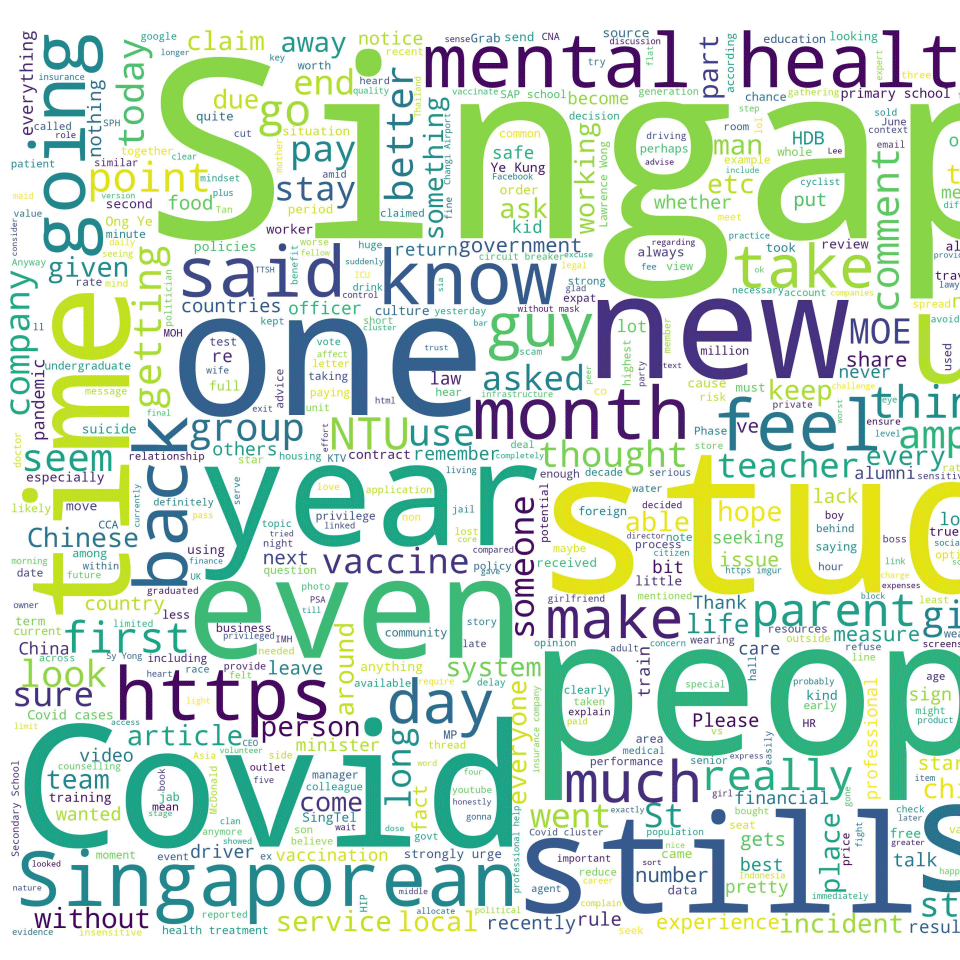

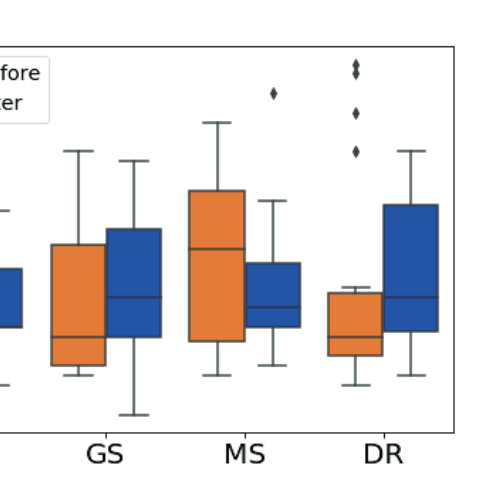

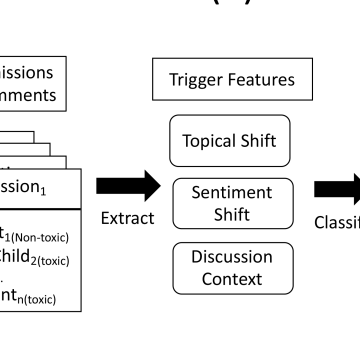

While the contagious nature of online toxicity sparked increasing interest in its early detection and prevention, most of the literature focuses on the Western world. In this work, we demonstrate that 1) it is possible to detect toxicity triggers in an Asian online community, and 2) toxicity triggers can be strikingly different between Western and Eastern contexts.

Yun Yu Chong, Haewoon Kwak

Proceedings of the 16th International AAAI Conference on Web and Social Media (ICWSM), 2022 (short)

Press coverage - AI Ethics Brief Newsletter by Montreal AI Ethics Institute

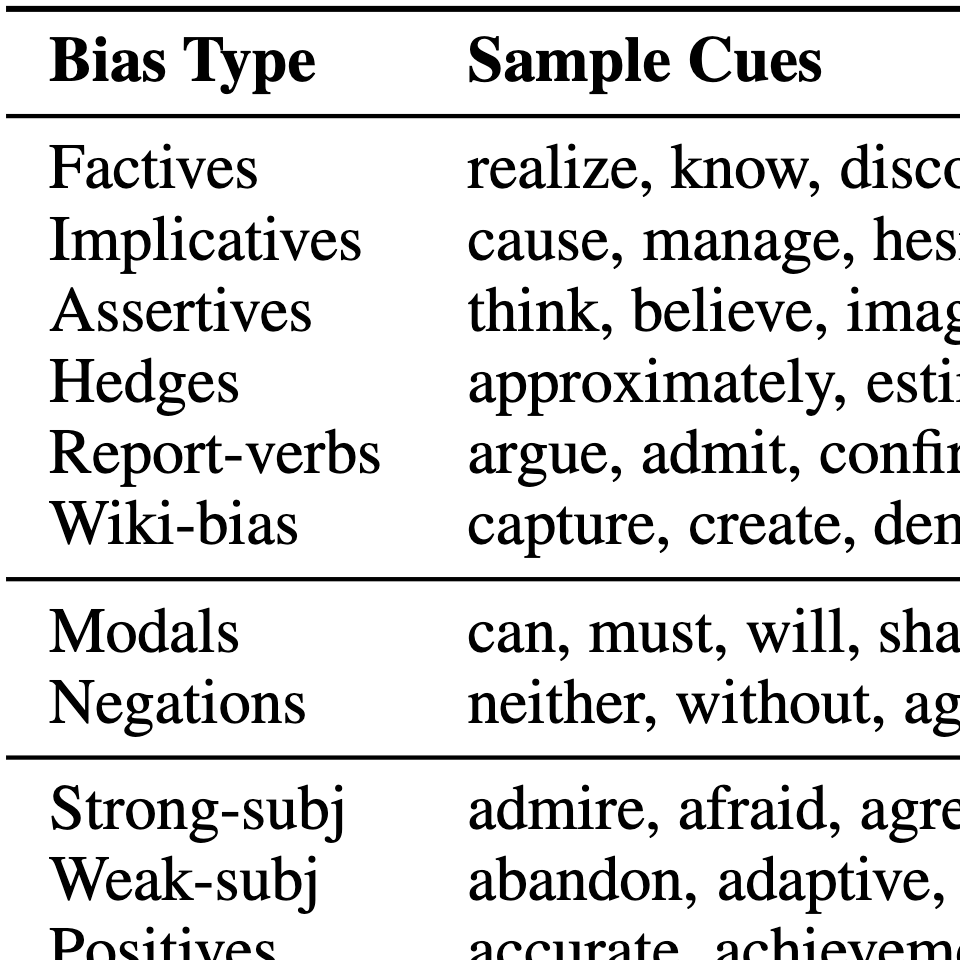

AI/ML/NLP dataset/tool bias/fairness

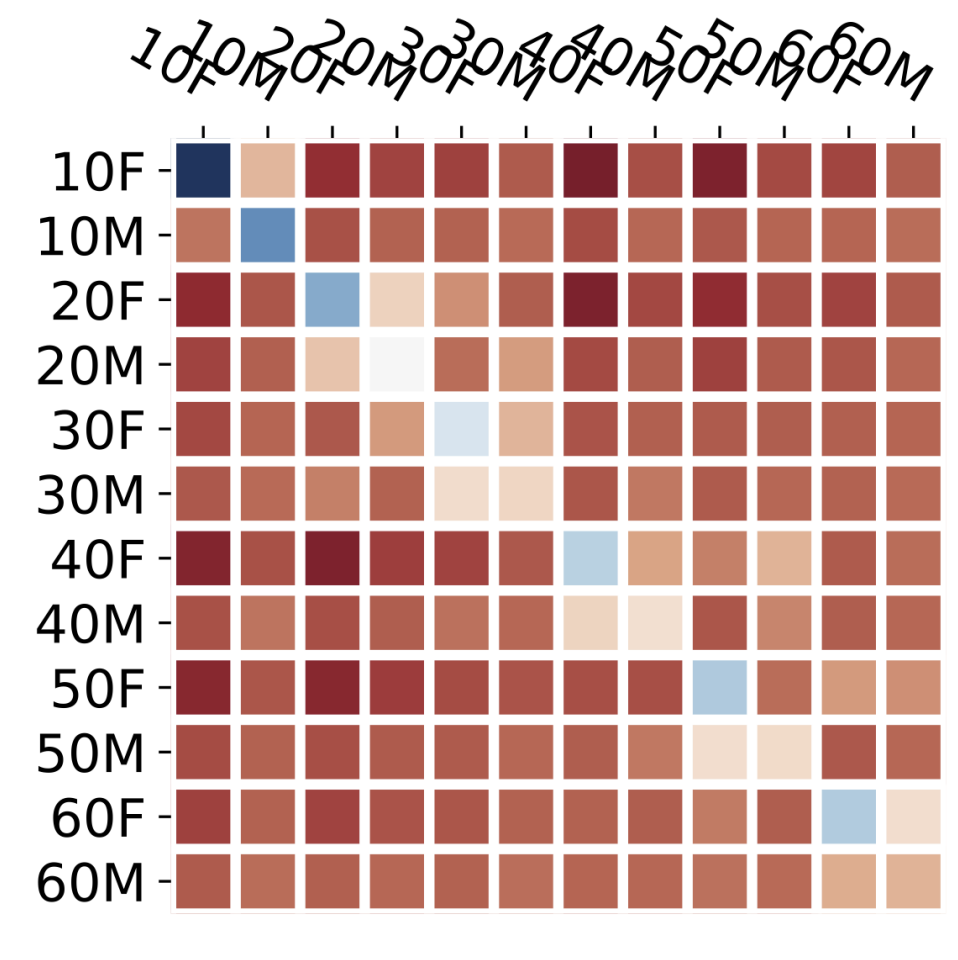

A conversation corpus is essential to build interactive AI applications. However, the demographic information of the participants in such corpora is largely underexplored mainly due to the lack of individual data in many corpora. In this work, we analyze a Korean nationwide daily conversation corpus constructed by the National Institute of Korean Language (NIKL) to characterize the participation of different demographic (age and sex) groups in the corpus.

Haewoon Kwak, Jisun An, Kunwoo Park

Proceedings of the 16th International AAAI Conference on Web and Social Media (ICWSM), 2022 (short)

AI/ML/NLP online harm

We investigate predictors of anti-Asian hate among Twitter users throughout COVID-19. With the rise of xenophobia and polarization that has accompanied widespread social media usage in many nations, online hate has become a major social issue, attracting many researchers. Here, we apply natural language processing techniques to characterize social media users who began to post anti-Asian hate messages during COVID-19. We compare two user groups – those who posted anti-Asian slurs and those who did not – with respect to a rich set of features measured with data prior to COVID-19 and show that it is possible to predict who later publicly posted anti-Asian slurs. …

Jisun An, Haewoon Kwak, Claire Seungeun Lee, Bogang Jun, Yong-Yeol Ahn

Findings of the Association for Computational Linguistics: EMNLP 2021

AI/ML/NLP dataset/tool bias/fairness

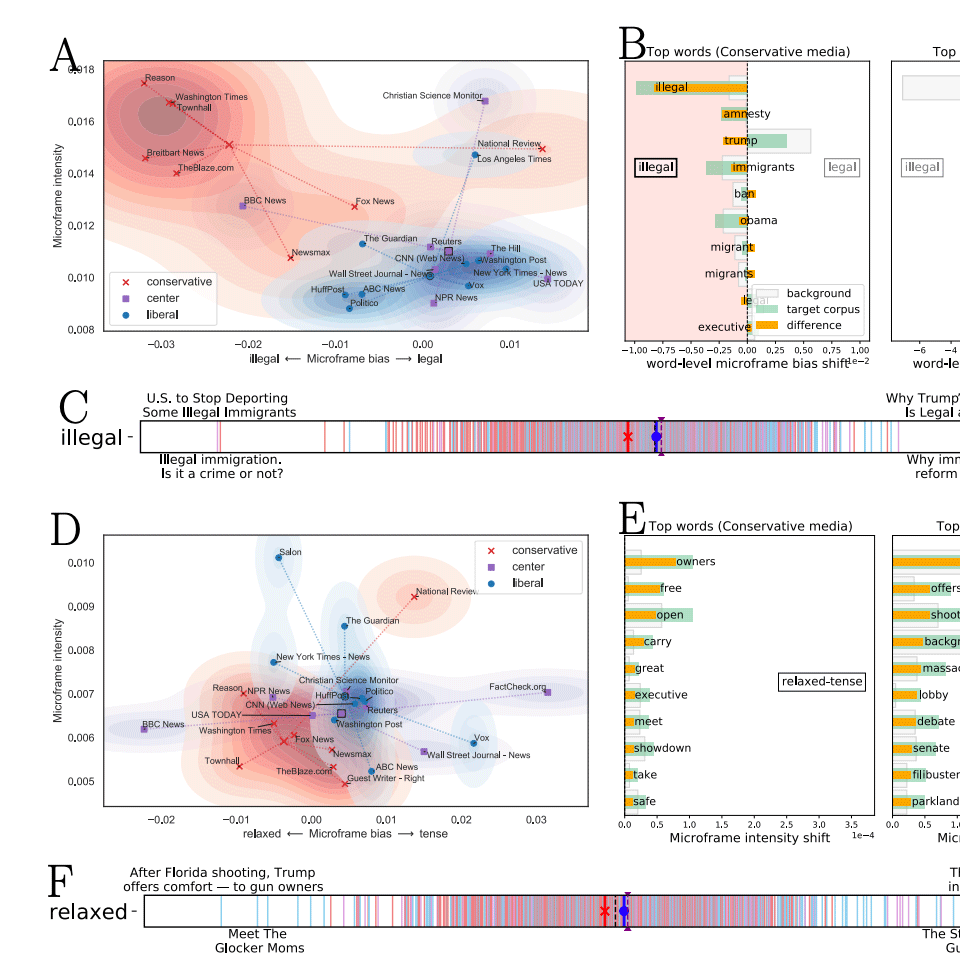

Framing is a process of emphasizing a certain aspect of an issue over the others, nudging readers or listeners towards different positions on the issue even without making a biased argument. Here, we propose FrameAxis, a method for characterizing documents by identifying the most relevant semantic axes (“microframes”) that are overrepresented in the text using word embedding. Our unsupervised approach can be readily applied to large datasets because it does not require manual annotations. …

Haewoon Kwak, Jisun An, Elise Jing, Yong-Yeol Ahn

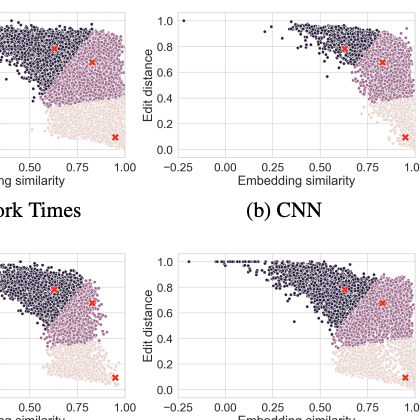

computational journalism AI/ML/NLP user engagement

We first build a parallel corpus of original news articles and their corresponding tweets shared by eight media outlets. Then, we explore how those outlets edited their tweets relative to the original headlines, and the effects would be …

Kunwoo Park, Haewoon Kwak, Jisun An, Sanjay Chawla

Proceedings of the 15th International AAAI Conference on Web and Social Media (ICWSM), 2021

computational journalism AI/ML/NLP bias/fairness

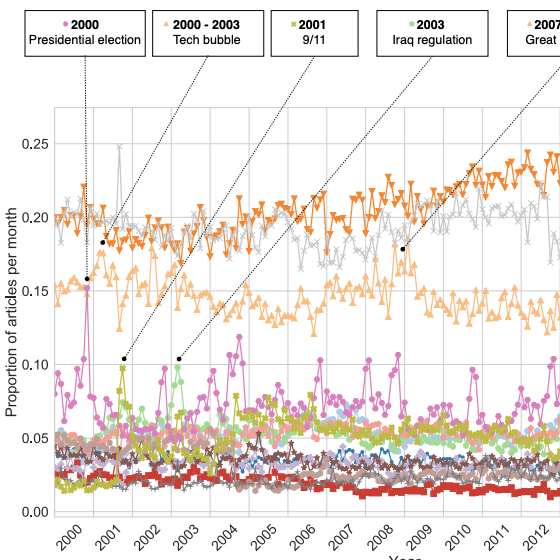

Framing is an indispensable narrative device for news media because even the same facts may lead to conflicting understandings if deliberate framing is employed. By developing a media frame classifier that achieves state-of-the-art performance, we systematically analyze the media frames of 1.5 million New York Times articles published from 2000 to 2017.

Haewoon Kwak, Jisun An, Yong-Yeol Ahn

Proceedings of the 12th ACM Conference on Web Science (WebSci), 2020

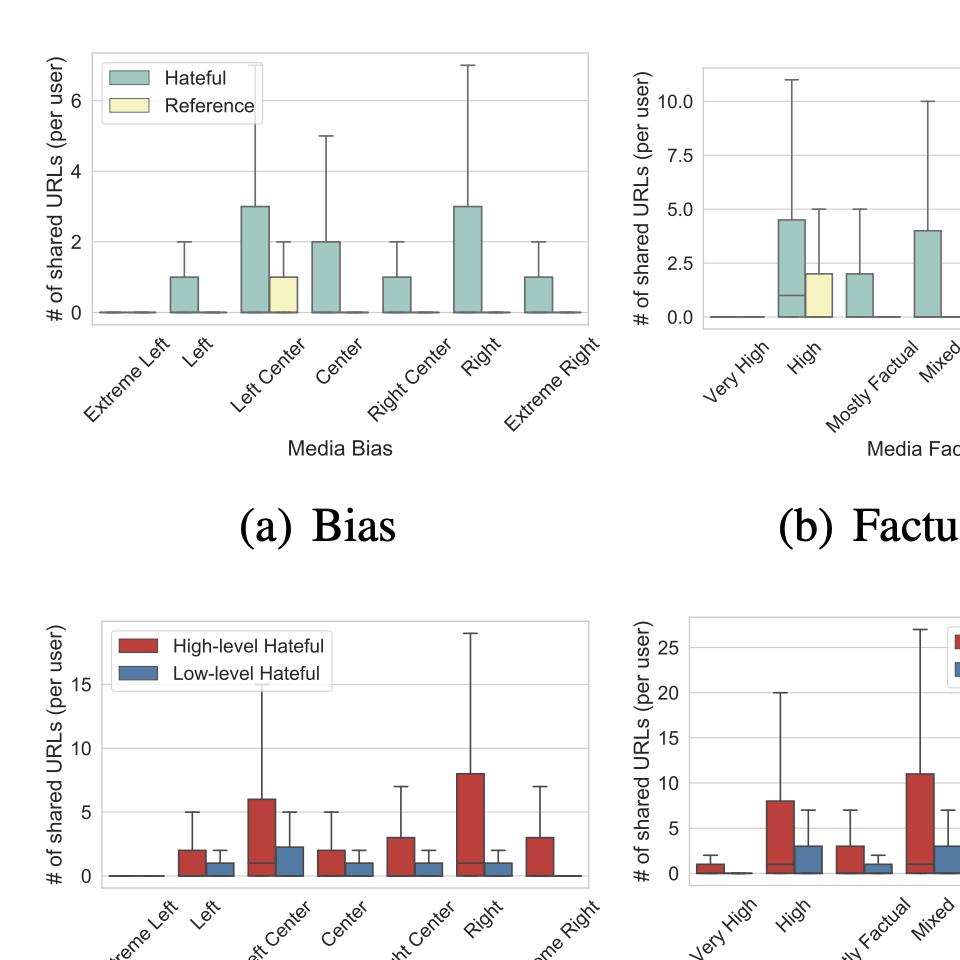

computational journalism AI/ML/NLP bias/fairness

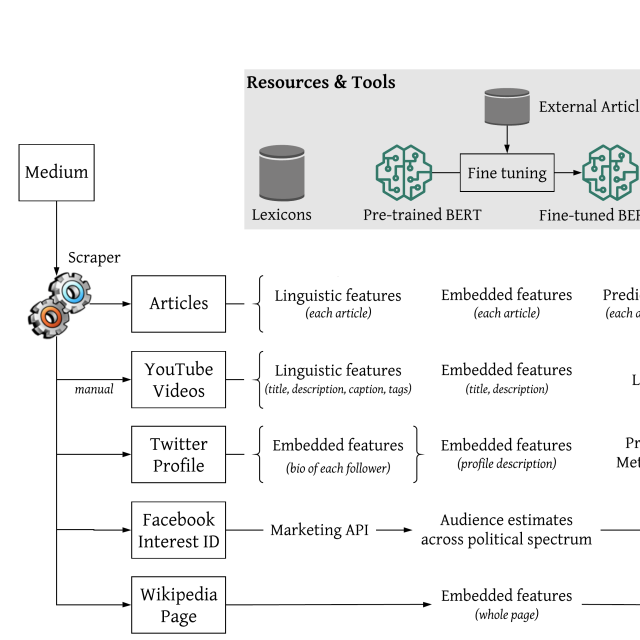

Predicting the political bias and the factuality of reporting of entire news outlets are critical elements of media profiling, which is an understudied but increasingly important research direction. The present level of proliferation of fake, biased, and propagandistic content online has made it impossible to fact-check every single suspicious claim, either manually or automatically. Thus, it has been proposed to profile entire news outlets and to look for those that are likely to publish fake or biased content. This makes it possible to detect likely “fake news” the moment it is published, by simply checking the reliability of its source. From a practical perspective, political bias and factuality of reporting have a linguistic aspect but also a social context.

Ramy Baly, Georgi Karadzhov, Jisun An, Haewoon Kwak, Yoan Dinkov, Ahmed Ali, James Glass, Preslav Nakov

Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics (ACL), 2020

computational journalism AI/ML/NLP dataset/tool bias/fairness

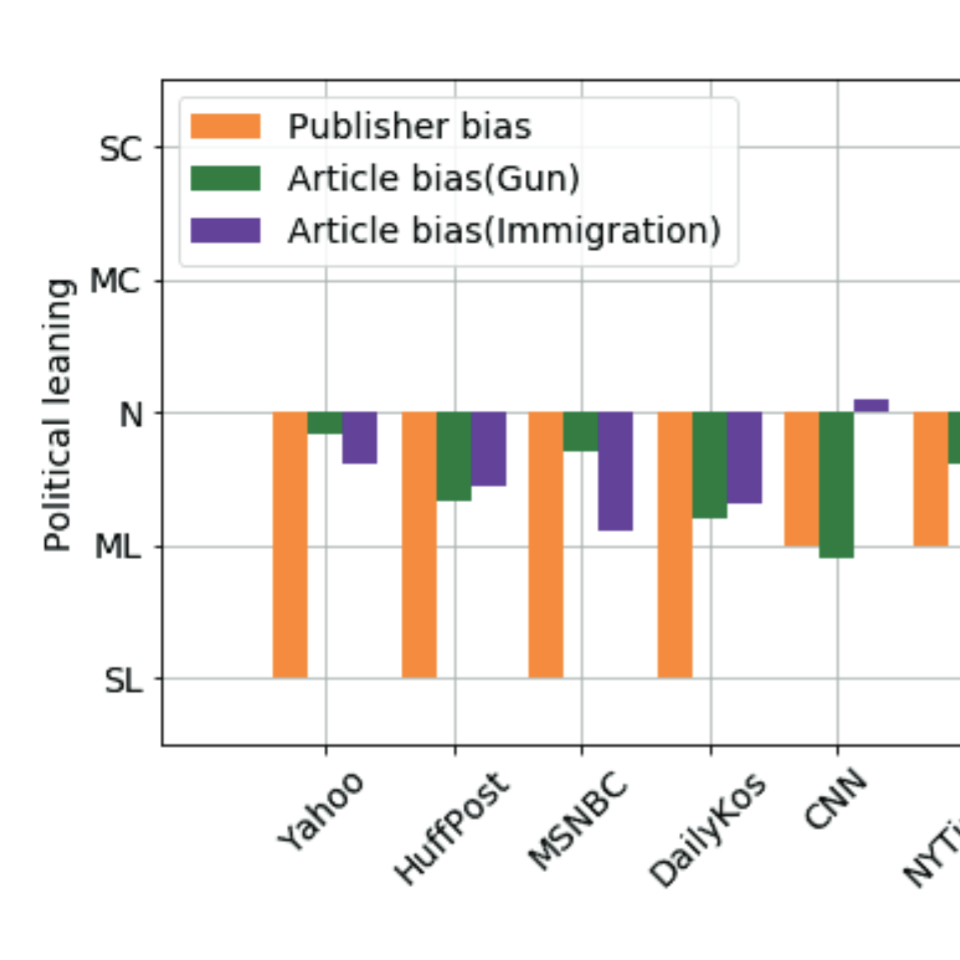

We empirically validate three common assumptions in building political media bias datasets, which are (i) labelers’ political leanings do not affect labeling tasks, (ii) news articles follow their source outlet’s political leaning, and (iii) political leaning of a news outlet is stable across different topics.

Soumen Ganguly, Juhi Kulshrestha, Jisun An, Haewoon Kwak

Proceedings of the 14th International AAAI Conference on Web and Social Media (ICWSM), 2020

AI/ML/NLP user engagement

We study two Reddit communities that adopted a scheme whereby posts include tags, referred to as flairs, that identify the poster’s education status, and we examine how the “transferred” social status affects the interactions among the users.

Kunwoo Park, Haewoon Kwak, Hyunho Song, Meeyoung Cha

Proceedings of the 14th International AAAI Conference on Web and Social Media (ICWSM), 2020

AI/ML/NLP online harm

We define toxicity triggers in online discussions as non-toxic comments that lead to toxic replies. Then, we build a neural network-based prediction model for toxicity triggers.

Hind Almerekhi, Haewoon Kwak, Bernard Jim Jansen, Joni Salminen (short)

Proceedings of The Web Conference (WWW), 2020

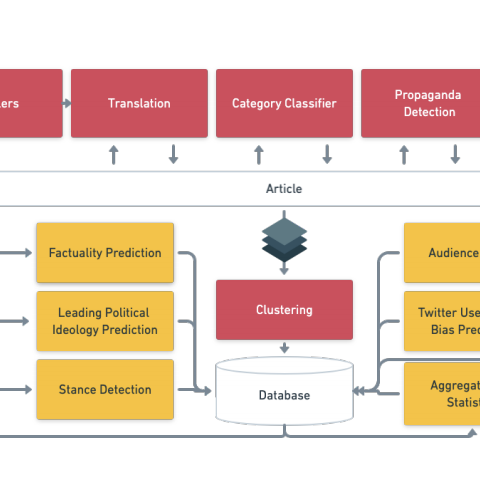

computational journalism AI/ML/NLP bias/fairness dataset/tool

We introduce Tanbih, a news aggregator with intelligent analysis tools to help readers understand what’s behind a news story. Our system displays news grouped into events and generates media profiles that show the general factuality of reporting, the degree of propagandistic content, hyper-partisanship, leading political ideology, general frame of reporting, and stance with respect to various claims and topics of a news outlet.

Yifan Zhang, Giovanni Da San Martino, Alberto Barrón-Cedeño, Salvatore Romeo, Jisun An, Haewoon Kwak, Todor Staykovski, Israa Jaradat, Georgi Karadzhov, Ramy Baly, Kareem Darwish, James Glass, Preslav Nakov (demo)

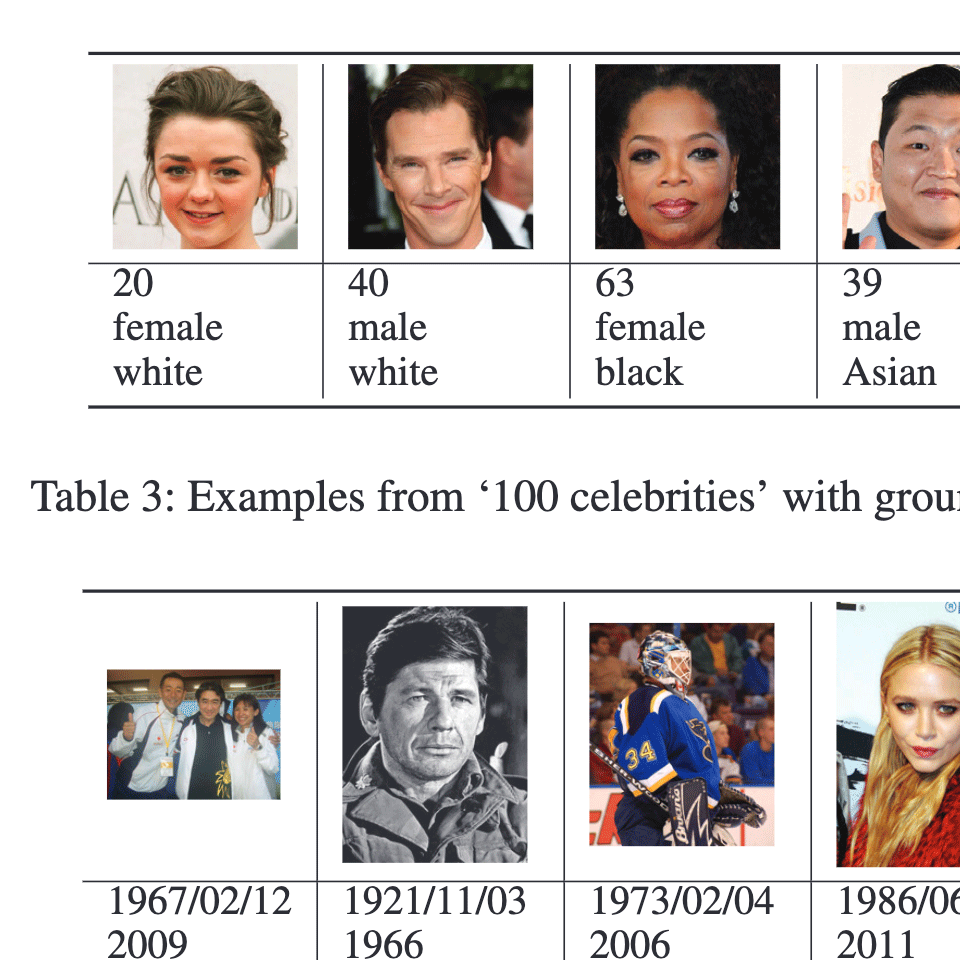

AI/ML/NLP dataset/tool bias/fairness

We evaluate four widely used face detection tools, which are Face++, IBM Bluemix Visual Recognition, AWS Rekognition, and Microsoft Azure Face API, using multiple datasets to determine their accuracy in inferring user attributes, including gender, race, and age.

Soon-gyo Jung, Jisun An, Haewoon Kwak, Joni Salminen, Bernard Jim Jansen

Proceedings of the 12th International AAAI Conference on Web and Social Media (ICWSM), 2018 (short)

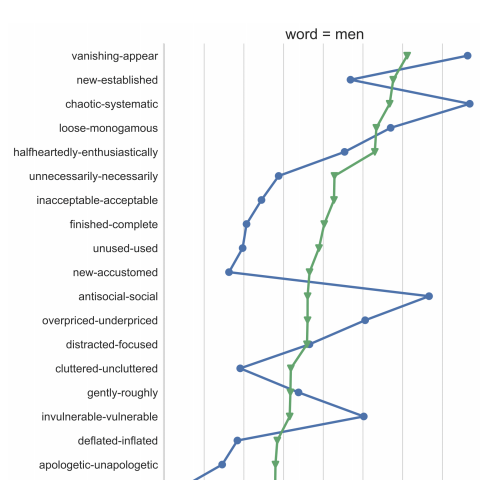

AI/ML/NLP dataset/tool

We propose SemAxis, a simple yet powerful framework to characterize word semantics using many semantic axes in word-vector spaces beyond sentiment. We demonstrate that SemAxis can capture nuanced semantic representations in multiple online communities. We also show that, when the sentiment axis is examined, SemAxis outperforms the state-of-the-art approaches in building domain-specific sentiment lexicons.

Jisun An, Haewoon Kwak, Yong-Yeol Ahn

Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (ACL), 2018
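The semantic-axis idea in the SemAxis summary above can be sketched in a few lines: an axis is the difference between the mean vectors of two opposing pole word sets, and a word is scored by its cosine similarity to that axis. This is a minimal sketch rather than the authors' released code. The two-dimensional embeddings are made-up toy values and the function names are illustrative.

```python
import math


def mean_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def semantic_axis(positive_pole, negative_pole):
    """Axis vector: mean of the positive pole minus mean of the negative pole."""
    pos = mean_vector(positive_pole)
    neg = mean_vector(negative_pole)
    return [p - q for p, q in zip(pos, neg)]


def axis_score(word_vector, axis):
    """Cosine similarity between a word vector and the axis."""
    dot = sum(a * b for a, b in zip(word_vector, axis))
    norm_w = math.sqrt(sum(a * a for a in word_vector))
    norm_a = math.sqrt(sum(b * b for b in axis))
    return dot / (norm_w * norm_a)


# Toy 2-d embeddings (hypothetical values, for illustration only).
toy = {
    "good": [1.0, 0.2],
    "great": [0.9, 0.1],
    "bad": [-1.0, 0.1],
    "awful": [-0.8, 0.3],
    "delightful": [0.7, 0.4],
    "dreadful": [-0.6, 0.5],
}

axis = semantic_axis(
    [toy["good"], toy["great"]],
    [toy["bad"], toy["awful"]],
)
score_delightful = axis_score(toy["delightful"], axis)  # positive: leans toward "good"
score_dreadful = axis_score(toy["dreadful"], axis)      # negative: leans toward "bad"
```

Swapping in a different pair of pole word sets yields a different axis, so the same embeddings can be probed along many dimensions beyond sentiment.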

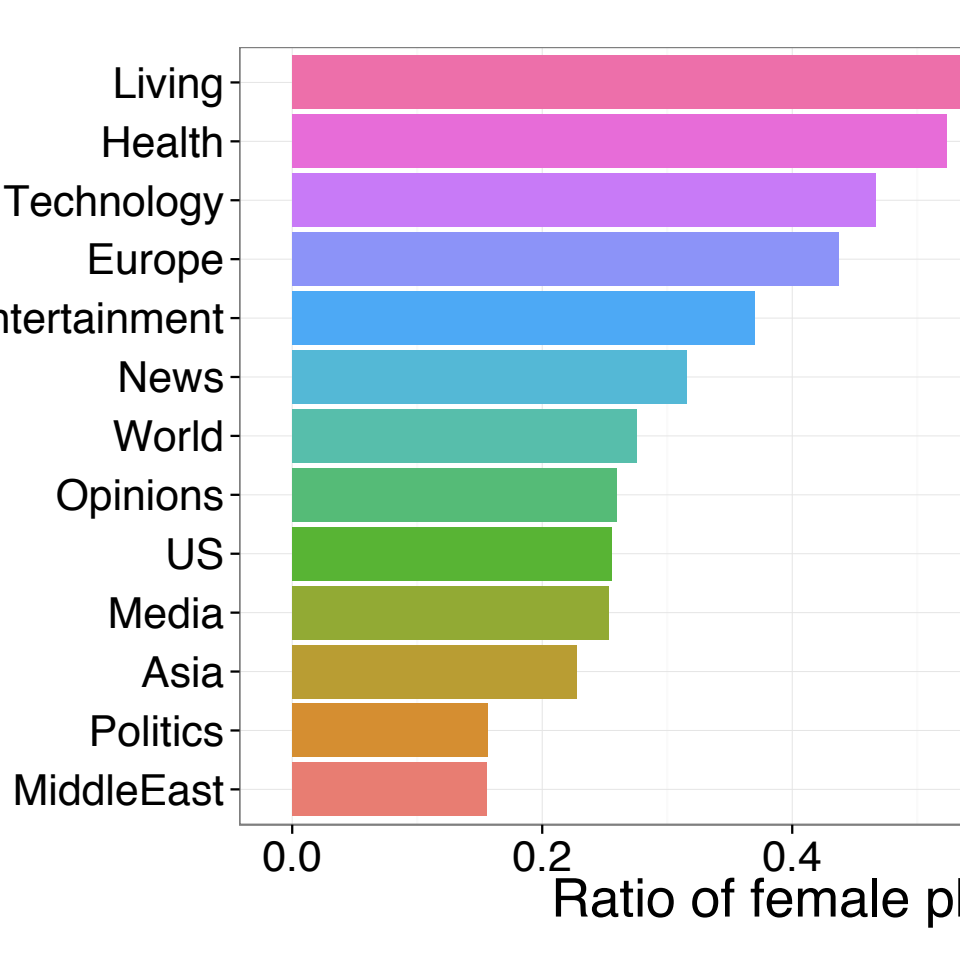

computational journalism AI/ML/NLP bias/fairness dataset/tool

In this work, we analyze more than two million news photos published in January 2016. We demonstrate i) which objects appear the most in news photos; ii) what the sentiments of news photos are; iii) whether the sentiment of news photos is aligned with the tone of the text; iv) how gender is treated; and v) how differently political candidates are portrayed. To the best of our knowledge, this is the first large-scale study of news photo contents using deep learning-based vision APIs.

Haewoon Kwak, Jisun An

ICWSM Workshop on NEws and publiC Opinion (NECO), 2016

Picked as The Best of the Physics arXiv (week ending March 26, 2016) in MIT Technology Review

Full List

A semantic embedding space based on large language models for modelling human beliefs

Byunghwee Lee, Rachith Aiyappa, Yong-Yeol Ahn, Haewoon Kwak, Jisun An

Nature Human Behaviour, 2025

Press coverage - Phys.org Featured Article

View-only without paywall (ReadCube)

A Survey on Predicting the Factuality and the Bias of News Media

Preslav Nakov, Jisun An, Haewoon Kwak, Muhammad Arslan Manzoor, Zain Muhammad Mujahid, Husrev Taha Sencar

ACL Findings, 2024

15+ papers citing this work (Google scholar)

Rematch: Robust and Efficient Matching of Local Knowledge Graphs to Improve Structural and Semantic Similarity

Zoher Kachwala, Jisun An, Haewoon Kwak, Filippo Menczer

NAACL Findings, 2024

ChatGPT Rates Natural Language Explanation Quality Like Humans: But on Which Scales?

Fan Huang, Haewoon Kwak, Kunwoo Park, Jisun An

LREC-COLING, 2024

15+ papers citing this work (Google scholar)

The Impact of Toxic Trolling Comments on Anti-vaccine YouTube Videos

Kunihiro Miyazaki, Takayuki Uchiba, Haewoon Kwak, Jisun An, Kazutoshi Sasahara

Scientific Reports, 2024

Public Perception of Generative AI on Twitter: An Empirical Study Based on Occupation and Usage

Kunihiro Miyazaki, Taichi Murayama, Takayuki Uchiba, Jisun An, Haewoon Kwak

EPJ Data Science, 2024

Press coverage - Blockchain News

35+ papers citing this work (Google scholar)

Enhancing Spatiotemporal Traffic Prediction through Urban Human Activity Analysis

Sumin Han, Youngjun Park, Minji Lee, Jisun An, Dongman Lee

ACM CIKM, 2023

Can We Trust the Evaluation on ChatGPT?

Rachith Aiyappa, Jisun An, Haewoon Kwak, Yong-Yeol Ahn

TrustNLP (Collocated with ACL), 2023

100+ papers citing this work (Google scholar)

Wearing Masks Implies Refuting Trump?: Towards Target-specific User Stance Prediction across Events in COVID-19 and US Election 2020

Hong Zhang, Haewoon Kwak, Wei Gao, Jisun An

ACM WebSci, 2023

5+ papers citing this work (Google scholar)

Is ChatGPT better than Human Annotators? Potential and Limitations of ChatGPT in Explaining Implicit Hate Speech

Fan Huang, Haewoon Kwak, Jisun An

WWW Companion, 2023

340+ papers citing this work (Google scholar)

Chain of Explanation: New Prompting Method to Generate Higher Quality Natural Language Explanation for Implicit Hate Speech

Fan Huang, Haewoon Kwak, Jisun An

WWW Companion, 2023

35+ papers citing this work (Google scholar)

Storm the Capitol: Linking Offline Political Speech and Online Twitter Extra-Representational Participation on QAnon and the January 6 Insurrection

Claire Seungeun Lee, Juan Merizalde, John D. Colautti, Jisun An and Haewoon Kwak

Frontiers in Sociology, 2022

Press coverage - PsyPost

35+ papers citing this work (Google scholar)

Understanding Toxicity Triggers on Reddit in the Context of Singapore

Yun Yu Chong, Haewoon Kwak

Proceedings of the 16th International AAAI Conference on Web and Social Media (ICWSM), 2022 (short)

Press coverage - AI Ethics Brief Newsletter by Montreal AI Ethics Institute

20+ papers citing this work (Google scholar)

Who Is Missing? Characterizing the Participation of Different Demographic Groups in a Korean Nationwide Daily Conversation Corpus

Haewoon Kwak, Jisun An, Kunwoo Park

Proceedings of the 16th International AAAI Conference on Web and Social Media (ICWSM), 2022 (short)

Predicting Anti-Asian Hateful Users on Twitter during COVID-19

Jisun An, Haewoon Kwak, Claire Seungeun Lee, Bogang Jun, Yong-Yeol Ahn

Findings of the Association for Computational Linguistics: EMNLP 2021

Code repo (github)

30+ papers citing this work (Google scholar)

FrameAxis: characterizing microframe bias and intensity with word embedding

Haewoon Kwak, Jisun An, Elise Jing, Yong-Yeol Ahn

PeerJ Computer Science 7:e644, 2021

Code repo (github)

35+ papers citing this work (Google scholar)

How-to Present News on Social Media: A Causal Analysis of Editing News Headlines for Boosting User Engagement

Kunwoo Park, Haewoon Kwak, Jisun An, Sanjay Chawla

Proceedings of the 15th International AAAI Conference on Web and Social Media (ICWSM), 2021

15+ papers citing this work (Google scholar)

A Systematic Media Frame Analysis of 1.5 Million New York Times Articles from 2000 to 2017

Haewoon Kwak, Jisun An, Yong-Yeol Ahn

Proceedings of the 12th ACM Conference on Web Science (WebSci), 2020

40+ papers citing this work (Google scholar)

What Was Written vs. Who Read It: News Media Profiling Using Text Analysis and Social Media Context

Ramy Baly, Georgi Karadzhov, Jisun An, Haewoon Kwak, Yoan Dinkov, Ahmed Ali, James Glass, Preslav Nakov

Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics (ACL), 2020

55+ papers citing this work (Google scholar)

Empirical Evaluation of Three Common Assumptions in Building Political Media Bias Datasets

Soumen Ganguly, Juhi Kulshrestha, Jisun An, Haewoon Kwak

Proceedings of the 14th International AAAI Conference on Web and Social Media (ICWSM), 2020

25+ papers citing this work (Google scholar)

“Trust Me, I Have a Ph.D.”: A Propensity Score Analysis on the Halo Effect of Disclosing One’s Offline Social Status in Online Communities

Kunwoo Park, Haewoon Kwak, Hyunho Song, Meeyoung Cha

Proceedings of the 14th International AAAI Conference on Web and Social Media (ICWSM), 2020

15+ papers citing this work (Google scholar)

Are These Comments Triggering? Predicting Triggers of Toxicity in Online Discussions

Hind Almerekhi, Haewoon Kwak, Bernard Jim Jansen, Joni Salminen (short)

Proceedings of The Web Conference (WWW), 2020

50+ papers citing this work (Google scholar)

Tanbih: Get To Know What You Are Reading

Yifan Zhang, Giovanni Da San Martino, Alberto Barrón-Cedeño, Salvatore Romeo, Jisun An, Haewoon Kwak, Todor Staykovski, Israa Jaradat, Georgi Karadzhov, Ramy Baly, Kareem Darwish, James Glass, Preslav Nakov (demo)

Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), 2019

Predicting Audience Engagement Across Social Media Platforms in the News Domain

Kholoud Khalil Aldous, Jisun An, Bernard J. Jansen

Proceedings of Social Informatics (SocInfo), 2019

Assessing the Accuracy of Four Popular Face Recognition Tools for Inferring Gender, Age, and Race

Soon-gyo Jung, Jisun An, Haewoon Kwak, Joni Salminen, Bernard Jim Jansen

Proceedings of the 12th International AAAI Conference on Web and Social Media (ICWSM), 2018 (short)

65+ papers citing this work (Google scholar)

SemAxis: A Lightweight Framework to Characterize Domain-Specific Word Semantics Beyond Sentiment

Jisun An, Haewoon Kwak, Yong-Yeol Ahn

Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (ACL), 2018

50+ papers citing this work (Google scholar)

Revealing the Hidden Patterns of News Photos: Analysis of Millions of News Photos Using GDELT and Deep Learning-based Vision APIs

Haewoon Kwak, Jisun An

ICWSM Workshop on NEws and publiC Opinion (NECO), 2016

Picked as The Best of the Physics arXiv (week ending March 26, 2016) in MIT Technology Review

20+ papers citing this work (Google scholar)

Two Tales of the World: Comparison of Widely Used World News Datasets: GDELT and EventRegistry

Haewoon Kwak, Jisun An

Proceedings of the 10th International Conference on Web and Social Media (ICWSM), 2016 (short)