Publications

A list of selected papers in which research team members participated.

For the full list, see below or visit Google Scholar (Jisun An and Haewoon Kwak).

computational journalism

political science

network science

game analytics

AI/ML/NLP

HCI

online harm

dataset/tool

bias/fairness

user engagement

computational journalism AI/ML/NLP online harm bias/fairness

How can we spot fake news? We go beyond single articles to evaluate the credibility of entire news outlets. This survey reviews the current state and future directions of technologies that can rapidly identify sources of disinformation by jointly analyzing their factuality and political bias.

Preslav Nakov, Jisun An, Haewoon Kwak, Muhammad Arslan Manzoor, Zain Muhammad Mujahid, Husrev Taha Sencar

AI/ML/NLP online harm

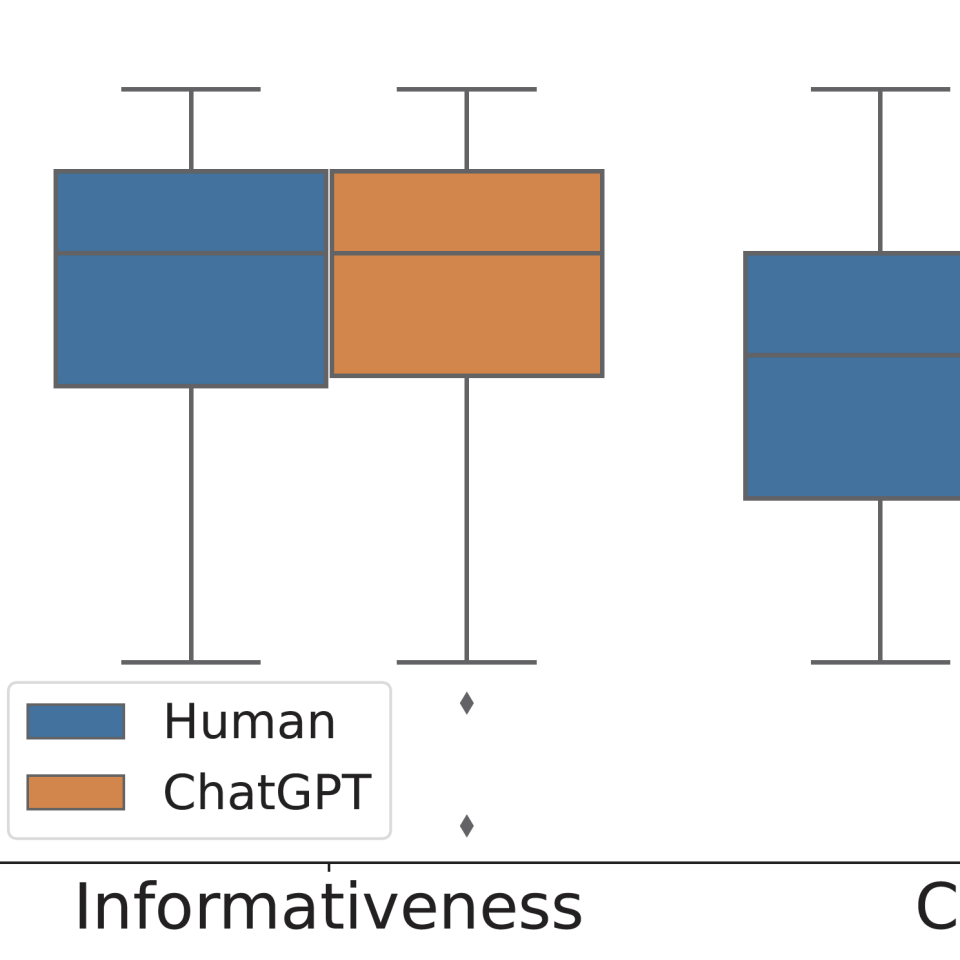

Recent studies have warned that much online hate speech is implicit. Because of its subtle nature, making the detection of such hate speech explainable has been a challenging problem. In this work, we examine whether ChatGPT can provide natural language explanations (NLEs) for implicit hate speech detection. We design our prompt to elicit concise ChatGPT-generated NLEs and conduct user studies that evaluate their quality in comparison with human-generated NLEs. We discuss the potential and limitations of ChatGPT in the context of implicit hate speech research.

Fan Huang, Haewoon Kwak, Jisun An

AI/ML/NLP online harm

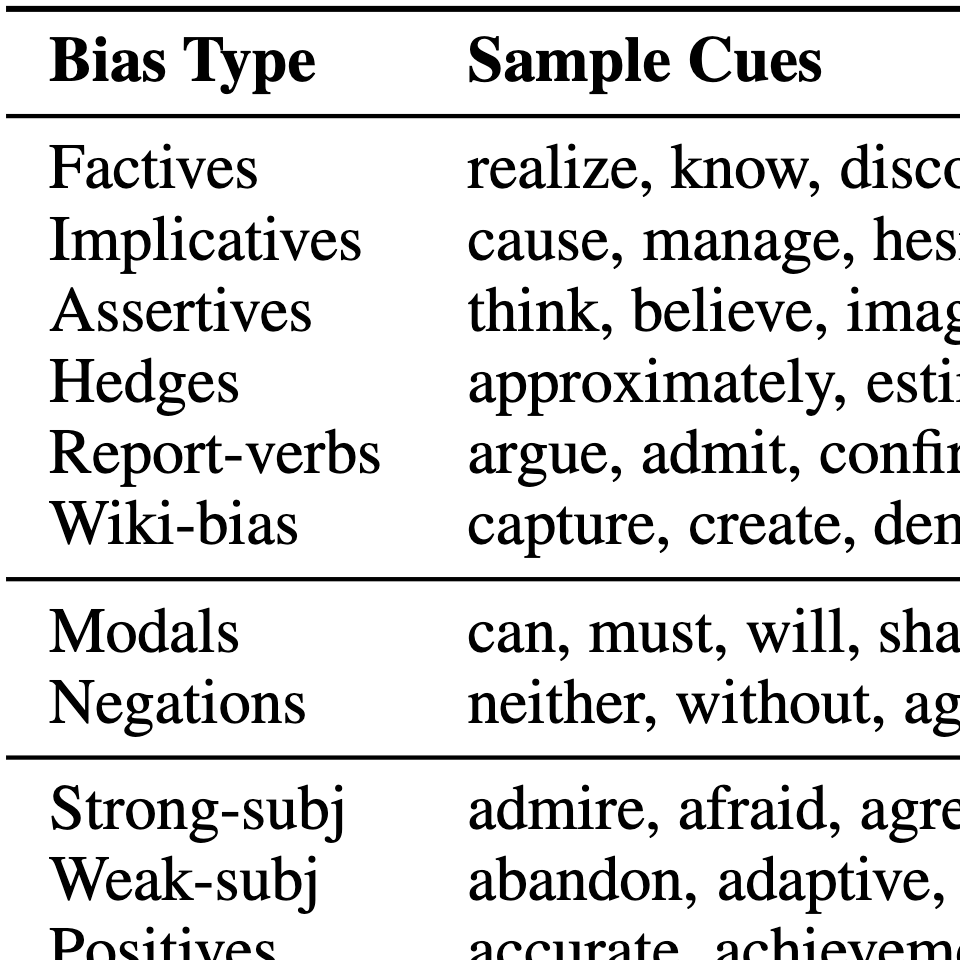

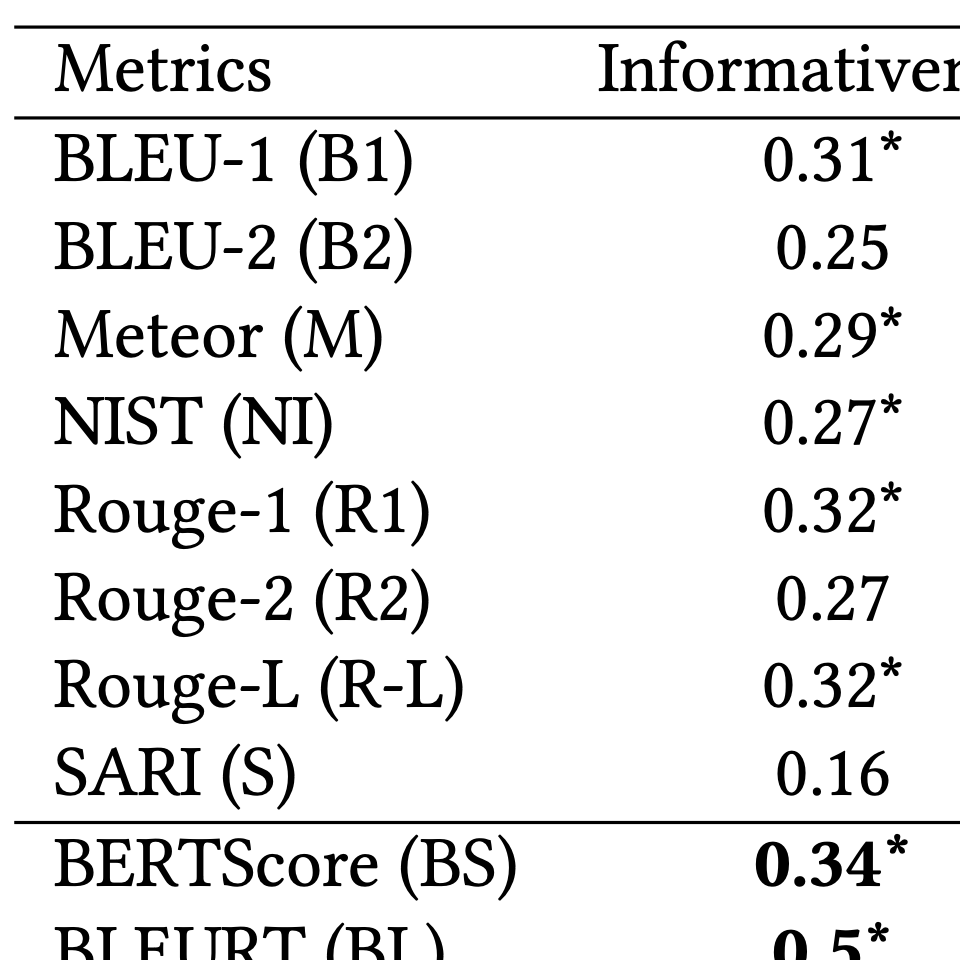

Recent studies have used advanced generative language models to produce natural language explanations (NLEs) of why a given text may be hateful. We propose Chain of Explanation (CoE) prompting, which uses heuristic words and the target group to generate high-quality NLEs for implicit hate speech. By providing accurate target information, we improve the BLEU score for NLE generation from 44.0 to 62.3. We then evaluate the quality of the generated NLEs with various automatic metrics and with human annotations of informativeness and clarity.

Fan Huang, Haewoon Kwak, Jisun An

computational journalism online harm

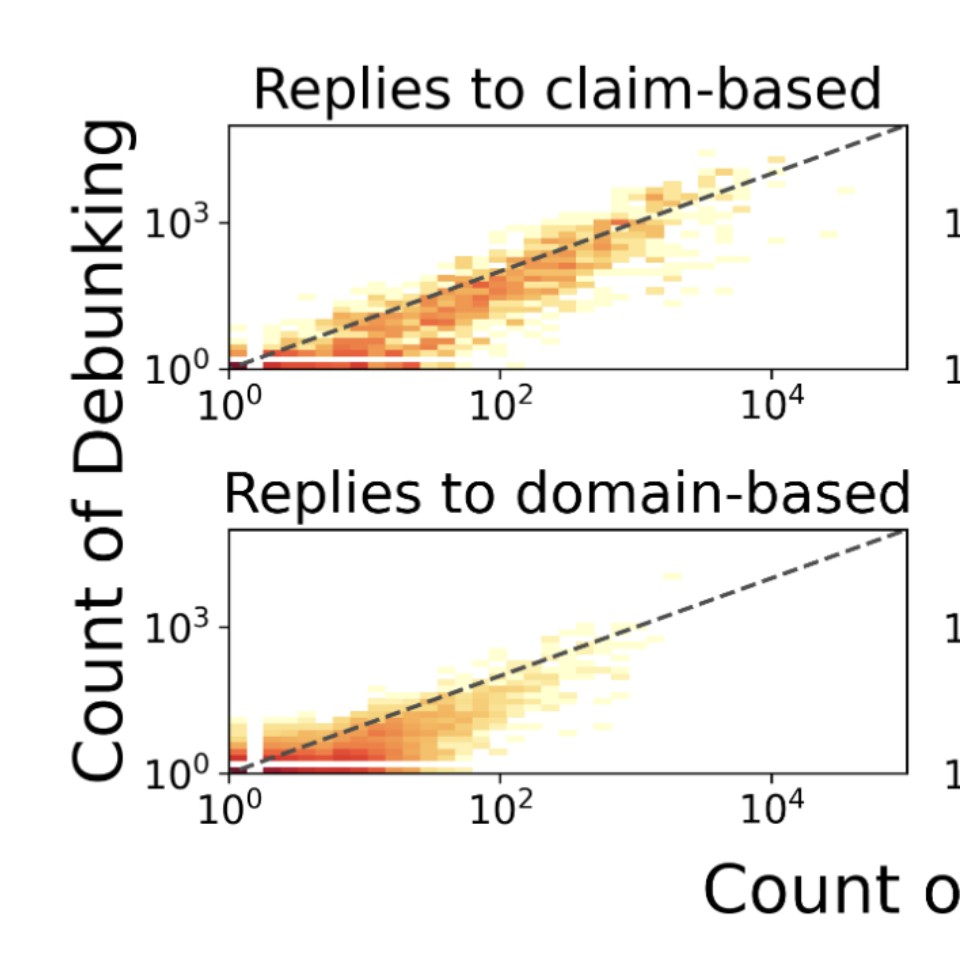

False information spreads on social media, and fact-checking is a potential countermeasure. However, there is a severe shortage of fact-checkers, and an efficient way to scale fact-checking is urgently needed, especially during pandemics such as COVID-19. In this study, we focus on spontaneous debunking by social media users, which has been overlooked in existing research despite its indicated usefulness for fact-checking and countering false information. Specifically, we characterize the tweets containing false information (fake tweets) that tend to be debunked, as well as the Twitter users who often debunk fake tweets.

Kunihiro Miyazaki, Takayuki Uchiba, Kenji Tanaka, Jisun An, Haewoon Kwak, Kazutoshi Sasahara

AI/ML/NLP political science online harm

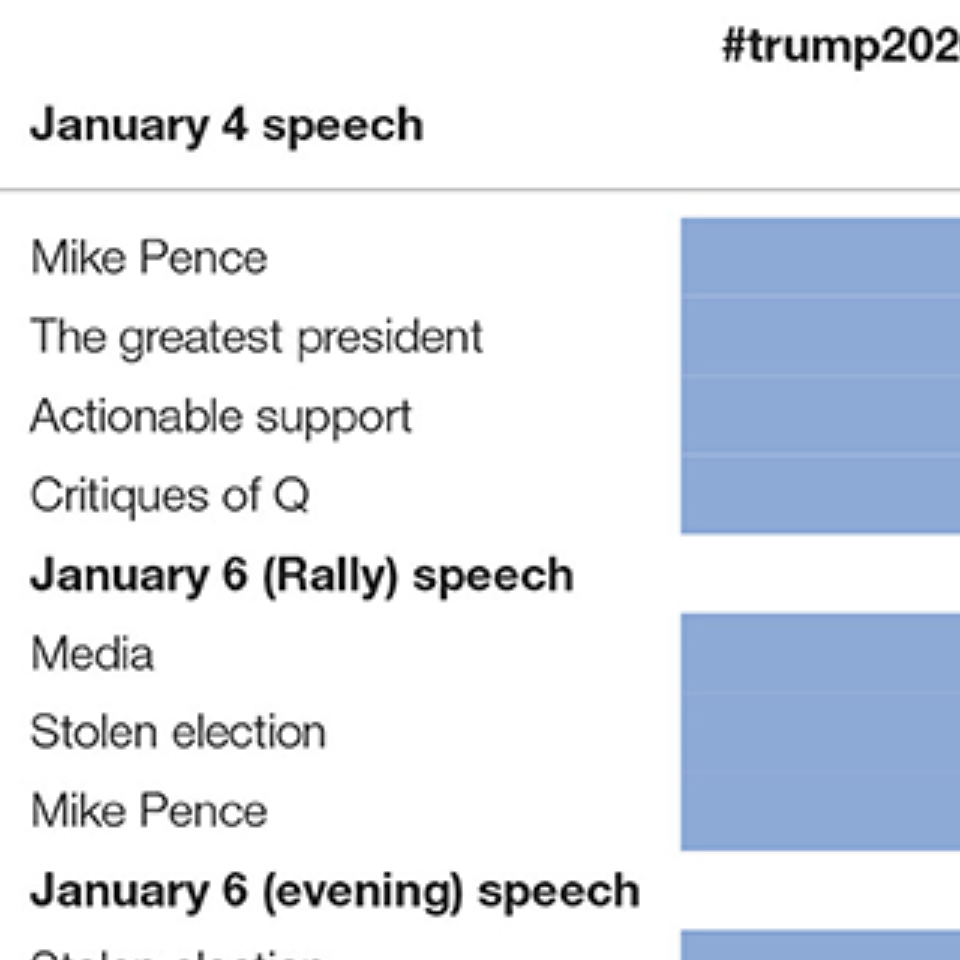

The transfer of power following the 2020 presidential election occurred during an unprecedented period in United States history. Uncertainty from the COVID-19 pandemic, ongoing societal tensions, and a fragile economy increased societal polarization, which was exacerbated by the outgoing president’s offline rhetoric. As a result, online groups such as QAnon engaged in political participation beyond traditional platforms. This research explores the link between offline political speech and online extra-representational participation by examining Twitter in the context of the January 6 insurrection. Using a mixed-methods approach of quantitative and qualitative thematic analyses, the study combines offline speech information with Twitter data collected around key speeches leading up to the insurrection, and explores the link between Trump’s offline speeches and QAnon’s hashtags across a three-day timeframe. We find that links exist between offline political speech and online extra-representational participation. This research illuminates this phenomenon and offers policy implications for the role of online messaging as a tool of political mobilization.

Claire Seungeun Lee, Juan Merizalde, John D. Colautti, Jisun An and Haewoon Kwak

Press coverage: PsyPost

AI/ML/NLP online harm

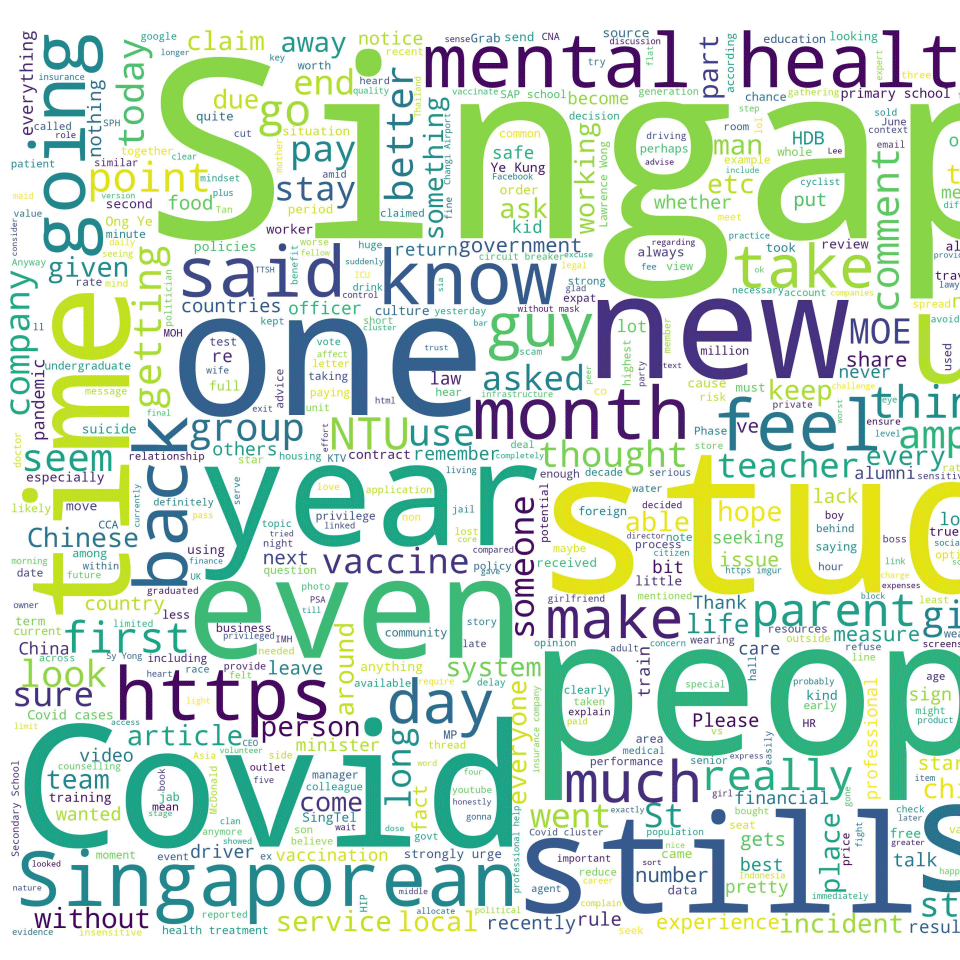

While the contagious nature of online toxicity has sparked growing interest in its early detection and prevention, most of the literature focuses on the Western world. In this work, we demonstrate that 1) it is possible to detect toxicity triggers in an Asian online community, and 2) toxicity triggers can differ strikingly between Western and Eastern contexts.

Yun Yu Chong, Haewoon Kwak

Proceedings of the 16th International AAAI Conference on Web and Social Media (ICWSM), 2022 (short)

Press coverage: AI Ethics Brief Newsletter by the Montreal AI Ethics Institute

AI/ML/NLP online harm

We investigate predictors of anti-Asian hate among Twitter users during COVID-19. With the rise of xenophobia and polarization that has accompanied widespread social media use in many nations, online hate has become a major social issue and has drawn the attention of many researchers. Here, we apply natural language processing techniques to characterize social media users who began posting anti-Asian hate messages during COVID-19. We compare two user groups – those who posted anti-Asian slurs and those who did not – with respect to a rich set of features measured on data from before COVID-19, and show that it is possible to predict who later publicly posted anti-Asian slurs. …

Jisun An, Haewoon Kwak, Claire Seungeun Lee, Bogang Jun, Yong-Yeol Ahn

Findings of the Association for Computational Linguistics: EMNLP 2021

AI/ML/NLP online harm

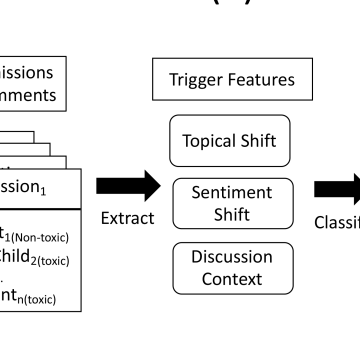

We define a toxicity trigger in online discussions as a non-toxic comment that leads to toxic replies. We then build a neural network-based model to predict toxicity triggers.

Hind Almerekhi, Haewoon Kwak, Bernard Jim Jansen, Joni Salminen

Proceedings of The Web Conference (WWW), 2020 (short)

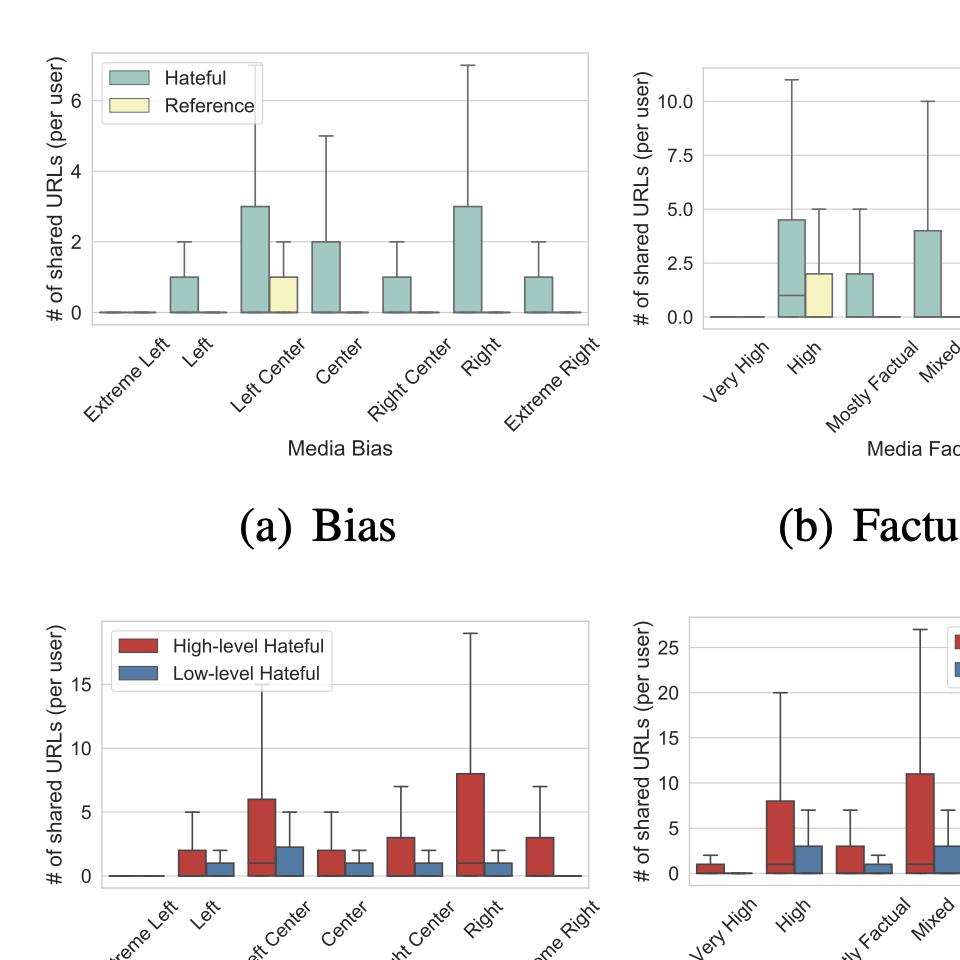

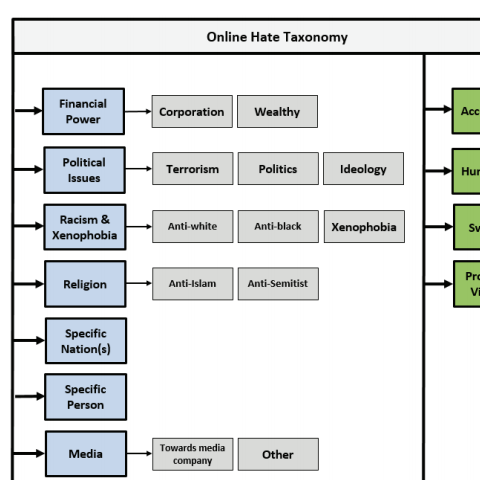

online harm

We manually label 5,143 hateful expressions in a dataset of 137,098 comments posted on the YouTube and Facebook videos of an online news media outlet. We then create a granular taxonomy of the different types and targets of online hate and train machine learning models to automatically detect and classify the hateful comments in the full dataset.

Joni Salminen, Hind Almerekhi, Milica Milenković, Soon-gyo Jung, Jisun An, Haewoon Kwak, Bernard J. Jansen

Proceedings of the 12th International AAAI Conference on Web and Social Media (ICWSM), 2018

online harm

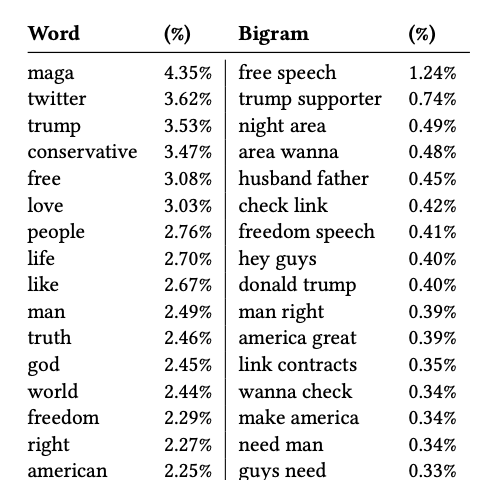

We provide, to the best of our knowledge, the first characterization of Gab. We collect and analyze 22M posts produced by 336K users between August 2016 and January 2018, finding that Gab is predominantly used for the dissemination and discussion of news and world events, and that it attracts alt-right users, conspiracy theorists, and other trolls.

Savvas Zannettou, Barry Bradlyn, Emiliano De Cristofaro, Haewoon Kwak, Michael Sirivianos, Gianluca Stringhini, Jeremy Blackburn

Companion Proceedings of The Web Conference (WWW), 2018

Press coverage: New Scientist and Vice

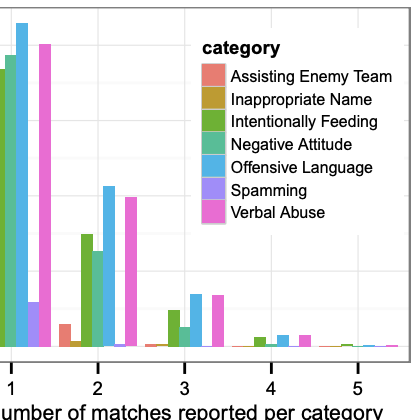

game analytics HCI online harm

In this work, we explore cyberbullying and other toxic behavior in team competition online games. Using a dataset of over 10 million player reports on 1.46 million toxic players, along with the corresponding crowdsourced decisions, we test several hypotheses drawn from theories that explain toxic behavior. Besides providing a large-scale, empirically based understanding of toxic behavior, our work can serve as a basis for building systems to detect, prevent, and counteract toxic behavior.

Haewoon Kwak, Jeremy Blackburn, Seungyeop Han

Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (CHI), 2015

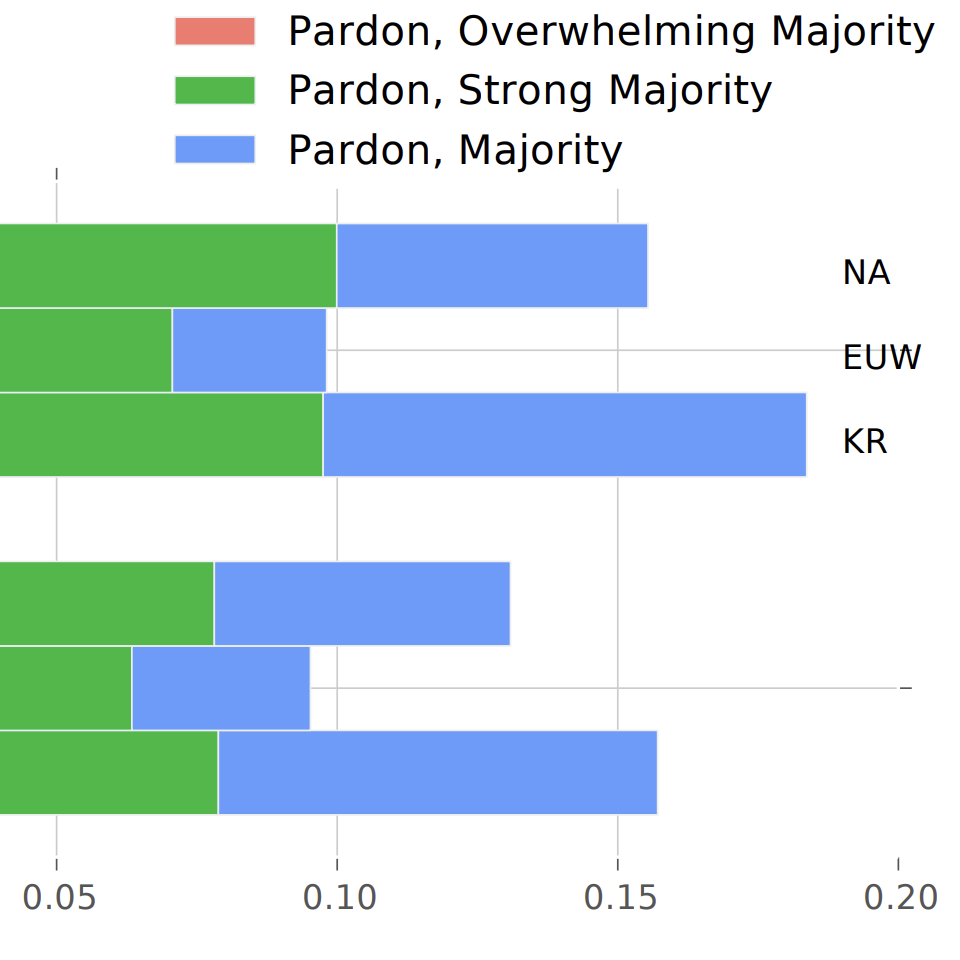

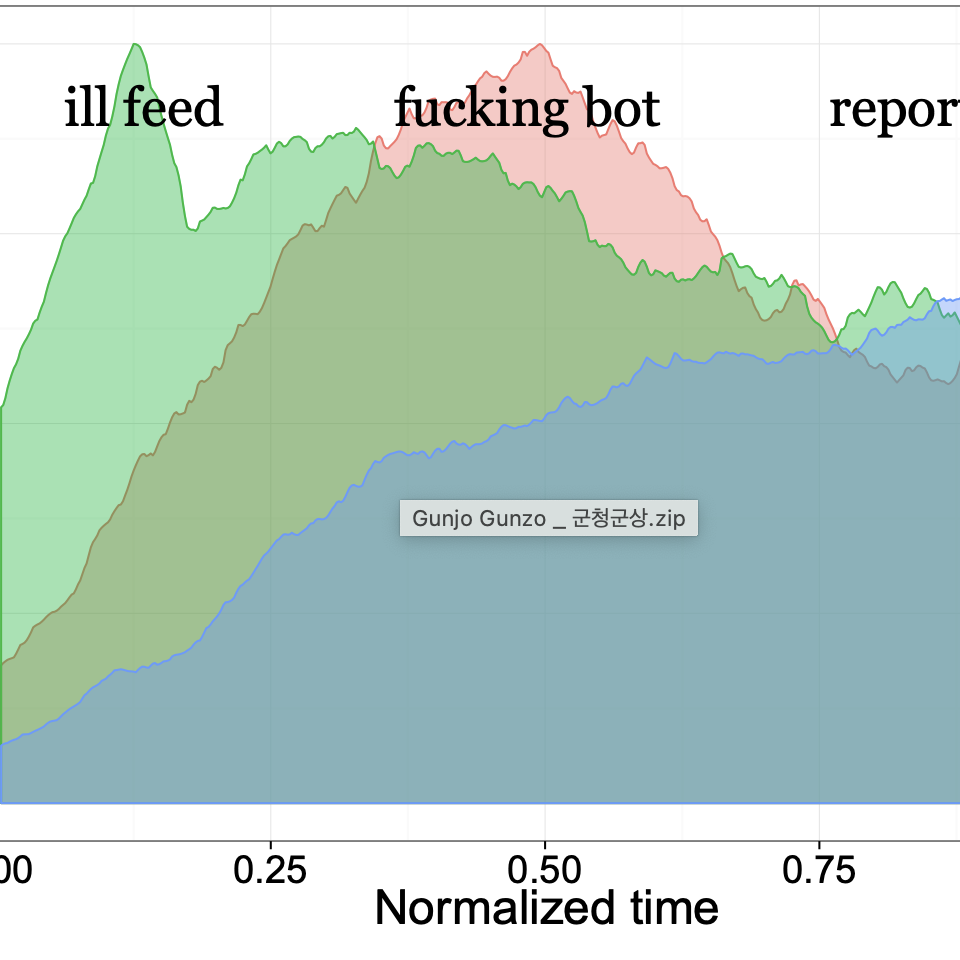

game analytics online harm

In this paper, we explore the linguistic components of toxic behavior using crowdsourced data from over 590,000 cases of players accused of toxic behavior in League of Legends, a popular match-based competitive game. We perform a series of linguistic analyses to gain a deeper understanding of the role communication plays in the expression of toxic behavior. We characterize the linguistic behavior of toxic players and compare it with that of typical players in an online competitive game. We also find empirical evidence of how a player transitions from typical to toxic behavior. Our findings could help automatically detect and warn players who may become toxic, and thus protect potential victims from toxic play in advance.

Haewoon Kwak, Jeremy Blackburn

SocInfo Workshop on Exploration on Games and Gamers (EGG), 2014

game analytics online harm

We propose a supervised learning approach to predicting crowdsourced decisions on toxic behavior, using large-scale labeled data: over 10 million user reports involving 1.46 million toxic players, together with the corresponding crowdsourced decisions. Our results show good performance in detecting cases decided by an overwhelming majority and in predicting the crowdsourced decisions on them. We also demonstrate that our classifier ports well across regions.

Jeremy Blackburn, Haewoon Kwak

Proceedings of the 23rd International Conference on World Wide Web (WWW), 2014

Press coverage: Nature, Scientific American, Chosun Ilbo

Full List

A Survey on Predicting the Factuality and the Bias of News Media

Preslav Nakov, Jisun An, Haewoon Kwak, Muhammad Arslan Manzoor, Zain Muhammad Mujahid, Husrev Taha Sencar

ACL Findings, 2024

15+ papers citing this work (Google Scholar)

Is ChatGPT better than Human Annotators? Potential and Limitations of ChatGPT in Explaining Implicit Hate Speech

Fan Huang, Haewoon Kwak, Jisun An

WWW Companion, 2023

340+ papers citing this work (Google Scholar)

Chain of Explanation: New Prompting Method to Generate Higher Quality Natural Language Explanation for Implicit Hate Speech

Fan Huang, Haewoon Kwak, Jisun An

WWW Companion, 2023

35+ papers citing this work (Google Scholar)

YouNICon: YouTube’s CommuNIty of Conspiracy Videos

Shaoyi Liaw, Fan Huang, Fabricio Benevenuto, Haewoon Kwak, Jisun An

ICWSM Dataset, 2023

5+ papers citing this work (Google Scholar)

‘This is Fake News’: Characterizing the Spontaneous Debunking from Twitter Users to COVID-19 False Information

Kunihiro Miyazaki, Takayuki Uchiba, Kenji Tanaka, Jisun An, Haewoon Kwak, Kazutoshi Sasahara

AAAI ICWSM, 2023

10+ papers citing this work (Google Scholar)

Storm the Capitol: Linking Offline Political Speech and Online Twitter Extra-Representational Participation on QAnon and the January 6 Insurrection

Claire Seungeun Lee, Juan Merizalde, John D. Colautti, Jisun An and Haewoon Kwak

Frontiers in Sociology, 2022

Press coverage: PsyPost

35+ papers citing this work (Google Scholar)

Understanding Toxicity Triggers on Reddit in the Context of Singapore

Yun Yu Chong, Haewoon Kwak

Proceedings of the 16th International AAAI Conference on Web and Social Media (ICWSM), 2022 (short)

Press coverage: AI Ethics Brief Newsletter by the Montreal AI Ethics Institute

20+ papers citing this work (Google Scholar)

Predicting Anti-Asian Hateful Users on Twitter during COVID-19

Jisun An, Haewoon Kwak, Claire Seungeun Lee, Bogang Jun, Yong-Yeol Ahn

Findings of the Association for Computational Linguistics: EMNLP 2021

Code repo (GitHub)

30+ papers citing this work (Google Scholar)

Are These Comments Triggering? Predicting Triggers of Toxicity in Online Discussions

Hind Almerekhi, Haewoon Kwak, Bernard Jim Jansen, Joni Salminen

Proceedings of The Web Conference (WWW), 2020 (short)

50+ papers citing this work (Google Scholar)

Anatomy of Online Hate: Developing a Taxonomy and Machine Learning Models for Identifying and Classifying Hate in Online News Media

Joni Salminen, Hind Almerekhi, Milica Milenković, Soon-gyo Jung, Jisun An, Haewoon Kwak, Bernard J. Jansen

Proceedings of the 12th International AAAI Conference on Web and Social Media (ICWSM), 2018

140+ papers citing this work (Google Scholar)

What is Gab? A Bastion of Free Speech or an Alt-Right Echo Chamber?

Savvas Zannettou, Barry Bradlyn, Emiliano De Cristofaro, Haewoon Kwak, Michael Sirivianos, Gianluca Stringhini, Jeremy Blackburn

Companion Proceedings of The Web Conference (WWW), 2018

Press coverage: New Scientist and Vice

280+ papers citing this work (Google Scholar)

Exploring Cyberbullying and Other Toxic Behavior in Team Competition Online Games

Haewoon Kwak, Jeremy Blackburn, Seungyeop Han

Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (CHI), 2015

310+ papers citing this work (Google Scholar)

Linguistic Analysis of Toxic Behavior in an Online Video Game

Haewoon Kwak, Jeremy Blackburn

SocInfo Workshop on Exploration on Games and Gamers (EGG), 2014

110+ papers citing this work (Google Scholar)

STFU NOOB! Predicting Crowdsourced Decisions on Toxic Behavior in Online Games

Jeremy Blackburn, Haewoon Kwak

Proceedings of the 23rd International Conference on World Wide Web (WWW), 2014

Press coverage: Nature, Scientific American, Chosun Ilbo

190+ papers citing this work (Google Scholar)